Concept

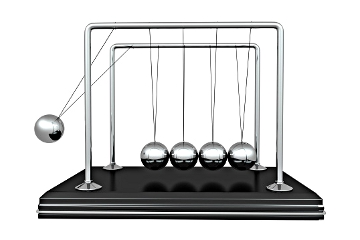

In this assignment, I wanted to simulate a dynamic environment filled with free-moving, colorful balls. The core idea was to combine physics, simulated with Matter.js, with an engaging visual display: the balls react to obstacles created by the user, so their motion is both expressive and physically structured.

Implementation

- Ball Behavior: Each ball follows basic laws of physics, including gravity and collision response, which gives it natural, fluid movement within the canvas.

- Dynamic Interaction: The user can shape the environment. Clicking two points on the canvas creates a line that serves as an obstacle for the balls, and pressing the spacebar removes the most recently added obstacle.

- Obstacle Interaction: The line obstacles are part of the Matter.js simulation, so the engine detects when a ball hits one and changes the ball’s trajectory accordingly, which makes the movement patterns more realistic.

- Visual Aesthetics: To create a visually captivating display, each ball changes its color based on its vertical position within the canvas, offering a vibrant and ever-changing visual experience.

Instructions

- Creating Platforms: Click once on the canvas to set the starting point of a platform. Click a second time to set its endpoint and create the platform. The platform will appear as a line between these two points. Once a platform is created, it acts as an obstacle in the environment. You can create multiple platforms by repeating the click process.

- Removing Platforms: Press the spacebar to remove the most recently created platform. Repeated presses remove platforms one at a time, newest first.

- Spawning Balls: Balls spawn automatically at regular intervals at the top center of the canvas. They fall under gravity and bounce off the platforms, showcasing the physics simulation.
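Spawning at regular intervals can be sketched as a small timer. The snippet below is a minimal, p5-free model and is not the sketch’s actual code. It assumes a fixed interval in milliseconds; the real interval is not stated. Each call returns the spawn points that have come due since the previous call, all placed at the top center of the canvas. In p5, `nowMs` could come from `millis()`, and each returned point would become a ball via `Matter.Bodies.circle(x, y, radius)` added to the world.

```javascript
// Hypothetical spawn timer: one spawn point (top center) every `intervalMs` milliseconds.
function createSpawner(canvasWidth, intervalMs) {
  let lastSpawn = 0; // time of the most recent scheduled spawn

  return function update(nowMs) {
    const spawns = [];
    // Catch up if several intervals passed since the last call (e.g. after a slow frame)
    while (nowMs - lastSpawn >= intervalMs) {
      lastSpawn += intervalMs;
      spawns.push({ x: canvasWidth / 2, y: 0 }); // top center of the canvas
    }
    return spawns;
  };
}

// Usage: a 400 px wide canvas, one ball every 500 ms
const spawnDue = createSpawner(400, 500);
console.log(spawnDue(500)); // one spawn point at the top center
```

Using `while` rather than `if` keeps the spawn rate steady when frames are slow. Swapping it for `if` caps spawning at one ball per frame, which avoids a burst of balls after the tab has been in the background.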

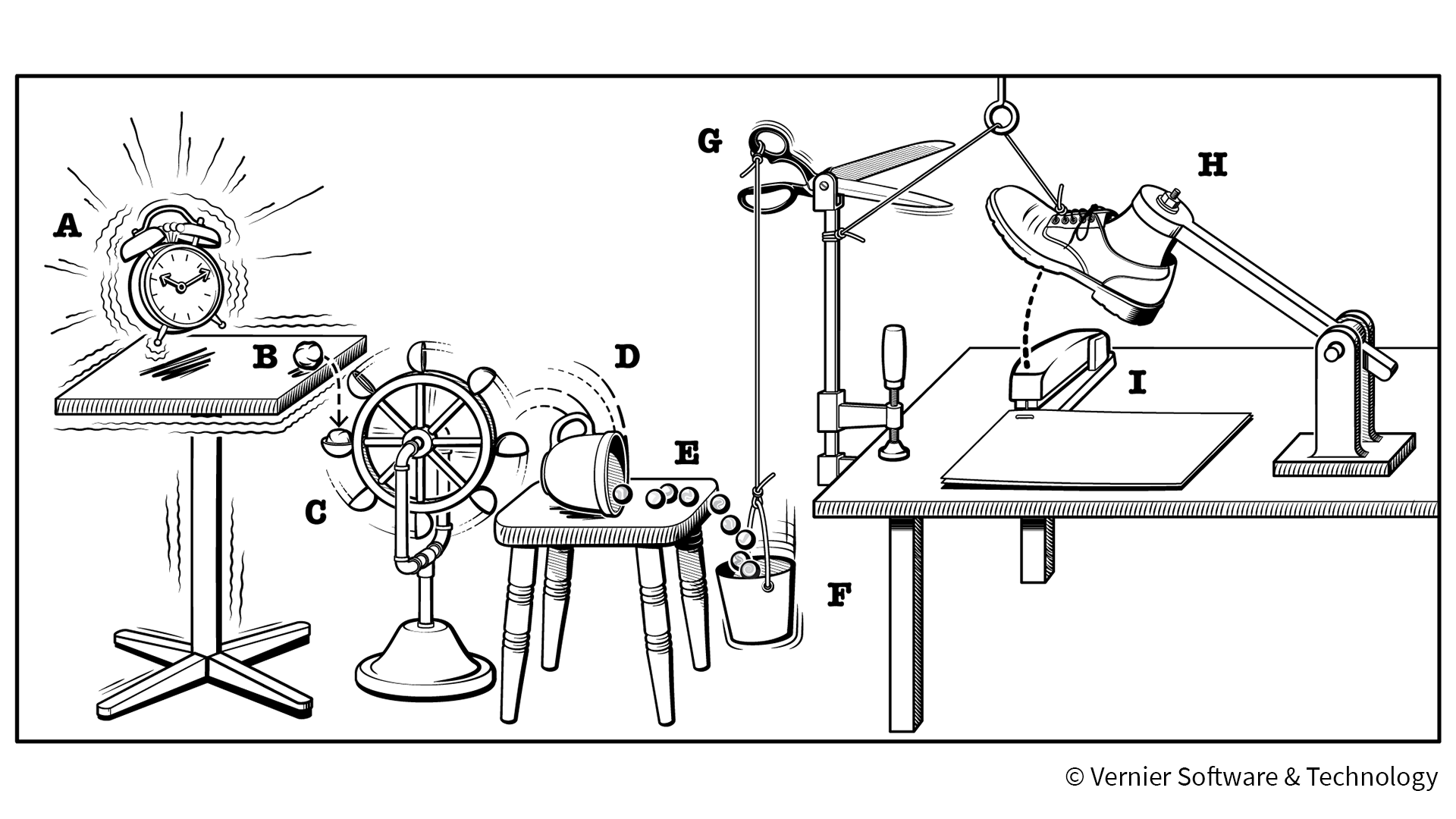

Code

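```javascript
// Two-click platform creation: the first click stores the start point,
// the second click stores the end point and builds the platform.
// Assumes globals declared elsewhere in the sketch: currentPlatform (initially null) and platforms (an array).
function mousePressed() {
  if (!currentPlatform) {
    // First click: remember where the platform starts
    currentPlatform = { start: createVector(mouseX, mouseY), end: null, body: null };
  } else if (!currentPlatform.end) {
    // Second click: set the end point, create the physics body and store the platform
    currentPlatform.end = createVector(mouseX, mouseY);
    createPlatform(currentPlatform);
    platforms.push(currentPlatform);
    // Reset so the next click starts a new platform
    currentPlatform = null;
  }
}
```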

`mousePressed()` handles both clicks. On the first click, it records the starting point of a new platform. On the second click, it sets the platform’s endpoint, calls `createPlatform()` to build the platform from these points, and adds it to the `platforms` array. `currentPlatform` is then reset to `null`, so further clicks create additional platforms.
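The click logic above, together with the spacebar removal described in the instructions, can be modeled without p5. The sketch below is a hypothetical stand-in and not the original code. Plain `{x, y}` objects replace `p5.Vector`, no Matter.js bodies are created, and `removeLatest()` is an assumed name for what the spacebar handler does to the `platforms` array.

```javascript
// p5-free model of the platform editor: two clicks create a platform,
// and removeLatest() undoes the newest one (the spacebar behavior).
class PlatformEditor {
  constructor() {
    this.platforms = [];         // finished platforms, oldest first
    this.currentPlatform = null; // platform waiting for its second click
  }

  // Mirrors mousePressed(): returns the finished platform on the second click, else null
  click(x, y) {
    if (!this.currentPlatform) {
      this.currentPlatform = { start: { x, y }, end: null };
      return null;
    }
    this.currentPlatform.end = { x, y };
    const finished = this.currentPlatform;
    this.platforms.push(finished);
    this.currentPlatform = null; // next click starts a new platform
    return finished;
  }

  // Hypothetical spacebar handler: removes and returns the newest platform (undefined if none)
  removeLatest() {
    return this.platforms.pop();
  }
}
```

Because platforms are pushed in creation order, `pop()` gives exactly the "newest first" removal order. In the real sketch, the removed platform’s body would also have to leave the physics world, for example with `Matter.Composite.remove(world, platform.body)`.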

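```javascript
// Builds a static Matter.js rectangle spanning the two clicked points.
// Assumes `World` is an alias for Matter.World and `world` is the engine's world
// (newer Matter.js versions recommend Matter.Composite.add instead of World.add).
function createPlatform(platform) {
  // Midpoint of the two clicks: Matter.js positions bodies by their center
  let centerX = (platform.start.x + platform.end.x) / 2;
  let centerY = (platform.start.y + platform.end.y) / 2;

  // Length and slope of the line; p5's atan2 returns radians in the default angleMode,
  // which is what Matter.Body.setAngle expects
  let length = dist(platform.start.x, platform.start.y, platform.end.x, platform.end.y);
  let angle = atan2(platform.end.y - platform.start.y, platform.end.x - platform.start.x);

  // Static (immovable) rectangle, 10 px thick, rotated to match the line
  platform.body = Matter.Bodies.rectangle(centerX, centerY, length, 10, { isStatic: true });
  Matter.Body.setAngle(platform.body, angle);
  World.add(world, platform.body);

  // Store the length for later use, e.g. when drawing the platform
  platform.width = length;
}
```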

- Calculate Platform’s Center and Angle: It finds the midpoint (`centerX`, `centerY`) and the angle (`angle`) of the line between the two points chosen by the user. These determine where the platform is positioned and how it is oriented.

- Create Matter.js Body for the Platform: A static rectangle (`Matter.Bodies.rectangle`) is created at the calculated center. Its width equals the distance between the two points and its height is fixed at 10 pixels, and it is then rotated with `Matter.Body.setAngle`.

- Add Platform to the World: The body is added to the Matter.js world (`World.add`), which makes it part of the physics simulation, and its length is stored in `platform.width`.
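The geometry in `createPlatform()` can be checked in isolation. The function below is a p5-free sketch of the same math. `platformGeometry` is a hypothetical name. `Math.hypot` computes the same distance as p5’s `dist()`, and `Math.atan2` matches p5’s `atan2()` in the default radians mode.

```javascript
// Midpoint, length and angle (radians) of the segment between two clicked points.
function platformGeometry(start, end) {
  const dx = end.x - start.x;
  const dy = end.y - start.y;
  return {
    centerX: (start.x + end.x) / 2, // Matter.js places bodies by their center
    centerY: (start.y + end.y) / 2,
    length: Math.hypot(dx, dy),     // same value as p5's dist()
    angle: Math.atan2(dy, dx),      // radians, as Matter.Body.setAngle expects
  };
}

// Usage: a platform from (0, 0) to (30, 40) has length 50 and center (15, 20)
console.log(platformGeometry({ x: 0, y: 0 }, { x: 30, y: 40 }));
```

Matter.js rotates a body around its center. Building the rectangle at the midpoint with width equal to the length and then rotating it therefore puts the middle of each short edge on one of the clicked points.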

The following code snippet demonstrates how each ball’s color is determined by its vertical position:

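```javascript
// `balls` holds Matter.js circle bodies (Matter.Bodies.circle sets `circleRadius`)
balls.forEach(ball => {
  // Map y position (0 at the top, height at the bottom) to a hue value
  let hue = map(ball.position.y, 0, height, 0, 360) % 360;

  // Set color: the first and third values move in opposite directions as the ball falls
  fill(360 - hue, 100, hue);
  noStroke();

  // Draw the ball at its physics radius so the drawing matches the collision shape
  ellipse(ball.position.x, ball.position.y, ball.circleRadius * 2, ball.circleRadius * 2);
});
```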
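The color mapping can also be traced without p5. The helpers below are hypothetical and mirror the expressions in the loop above. `mapRange` reimplements the linear rescale that p5’s `map()` performs (without its optional clamping), and `fillValuesForY` returns the three values passed to `fill()`.

```javascript
// Linear rescale of `value` from [start1, stop1] to [start2, stop2], like p5's map()
function mapRange(value, start1, stop1, start2, stop2) {
  return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
}

// Same expression as the sketch: y in [0, height] becomes a value in [0, 360)
function hueForY(y, canvasHeight) {
  return mapRange(y, 0, canvasHeight, 0, 360) % 360;
}

// The three arguments the sketch passes to fill() for a ball at height y
function fillValuesForY(y, canvasHeight) {
  const hue = hueForY(y, canvasHeight);
  return [360 - hue, 100, hue];
}

// Usage: halfway down a 400 px canvas
console.log(fillValuesForY(200, 400)); // [ 180, 100, 180 ]
```

A ball exactly at the bottom edge maps to 360, which `% 360` wraps back to 0, so it gets the same fill as a ball at the top. JavaScript’s `%` also keeps the sign of its left operand. If balls ever start above the canvas (negative y), the result would be negative, and `((h % 360) + 360) % 360` would keep it in range.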

Challenges

- Rendering Challenges: One of the main challenges in this project was ensuring that the rendering of the balls and obstacles accurately matched their physical simulations. Achieving a seamless visual representation that aligns with the physics engine’s calculations required meticulous adjustments, particularly in synchronizing the balls’ movement and obstacle interactions within the canvas.
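One way to check that a drawn platform lines up with its body is to compute the rectangle’s corners from the same center, size and angle that were given to Matter.js. The helper below is a hypothetical, p5-free sketch of that rotation, not code from the original sketch. In p5 the same result is usually drawn with `push()`, `translate(x, y)`, `rotate(angle)`, `rectMode(CENTER)`, `rect(0, 0, w, h)` and `pop()`. Matter.js also exposes a body’s world-space corners directly as `body.vertices`.

```javascript
// World-space corners of a width x height rectangle centered at (cx, cy),
// rotated by `angle` radians around its center (screen coordinates, y pointing down).
function rectangleCorners(cx, cy, width, height, angle) {
  const cos = Math.cos(angle);
  const sin = Math.sin(angle);
  const hw = width / 2;
  const hh = height / 2;
  // Unrotated corners relative to the center: top-left, top-right, bottom-right, bottom-left
  const local = [
    [-hw, -hh],
    [hw, -hh],
    [hw, hh],
    [-hw, hh],
  ];
  // Rotate each corner about the origin, then shift it to the rectangle's center
  return local.map(([x, y]) => ({
    x: cx + x * cos - y * sin,
    y: cy + x * sin + y * cos,
  }));
}

// Usage: a 100 x 10 platform centered at (0, 0), rotated a quarter turn
console.log(rectangleCorners(0, 0, 100, 10, Math.PI / 2));
```

Comparing these corners with what appears on screen, or with `body.vertices`, quickly shows whether the drawing code uses the body’s center and angle consistently.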

- Platform Creation Logic: Crafting the logic for creating and positioning platforms dynamically based on user input presented its own set of challenges. It involved calculating the correct placement and orientation of each platform to behave as expected within the physics environment.

Future Improvements

- Enhanced User Interaction: Future iterations could explore more intricate user interactions, such as enabling users to modify the direction and strength of gravity or interactively change the properties of the balls.

- Dynamic Obstacles and Environment: Introducing elements like moving obstacles or zones with different gravitational pulls could add more layers of complexity and engagement to the simulation.

- Implementing Sounds during Collisions: Playing a sound when a ball collides with a platform could add another dimension to the sketch, especially if each sound were linked to the platform’s position. That would turn the sketch into a visual music maker.
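As a rough sketch of the position-to-pitch idea, the hypothetical helpers below map a platform’s vertical center to a note, so higher platforms play higher notes. The note range (MIDI 48 to 84) is an illustrative choice and not part of the current sketch. The equal-temperament formula f = 440 · 2^((n − 69)/12) is standard. In a full implementation, the trigger could come from Matter.js collision events (`Matter.Events.on(engine, "collisionStart", callback)`, whose event lists the colliding `pairs`). The frequency could then be passed to an oscillator such as p5.sound’s `p5.Oscillator`.

```javascript
// Hypothetical mapping from a platform's vertical position to a pitch.
const LOW_NOTE = 48;  // MIDI C3 (illustrative choice)
const HIGH_NOTE = 84; // MIDI C6 (illustrative choice)

// Platforms near the top (small y) get high notes; near the bottom, low notes
function noteForPlatform(centerY, canvasHeight) {
  // Clamp to the canvas so off-screen positions still give a valid note
  const t = Math.min(Math.max(centerY / canvasHeight, 0), 1);
  return Math.round(HIGH_NOTE - t * (HIGH_NOTE - LOW_NOTE));
}

// Equal-temperament frequency in Hz, with MIDI note 69 = A4 = 440 Hz
function midiToFrequency(note) {
  return 440 * Math.pow(2, (note - 69) / 12);
}

// Usage: a platform centered at y = 150 on a 360 px tall canvas
console.log(midiToFrequency(noteForPlatform(150, 360))); // 440
```

Rounding to whole MIDI notes keeps every platform on a semitone, so several platforms sound like notes of a scale instead of arbitrary tones.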