Concept:

My concept actually came from my recent obsession with a Korean historical drama series. I have always had a deep passion for Korea's traditional arts because my childhood was filled with visits to museums and palaces in Seoul, so Korean traditional art and music have always been dear to my heart. Last year, in my Intro to IM class, I wanted to recreate Korean traditional patterns for my final project, but because my knowledge of p5.js was much more limited back then, I gave up on the idea. This time, however, I wanted to challenge myself and build my project around it, especially since my obsession with the drama had made me feel motivated to do so.

Another feature I had wanted to implement for a long time was letting users manipulate audio as well. This gave me the idea for my final project: letting users control and create their own visuals and music, with both the sketch and the audio being interactive, around the theme of introducing Korean traditional art.

I thought it would be easier to showcase Korean traditional art if I had a basic “background layout” for both the audio and the visual aspects.

For the visual aspect, I decided to use a backdrop of a Korean traditional landscape called Irworobongdo, a folding screen painted with peaks, the sun, the moon, and more that was placed behind the king’s throne during the Joseon dynasty. On top of that background, different coded elements such as flowers, trees, and fireflies would “grow” or be added.

As for the audio, I decided to use a Korean traditional song named 比翼連里, because I thought its melody and general atmosphere fit the visuals of my sketch well.

Process:

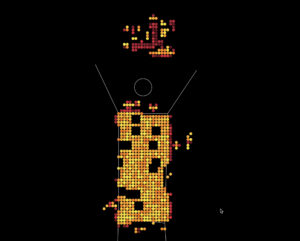

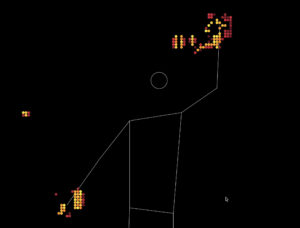

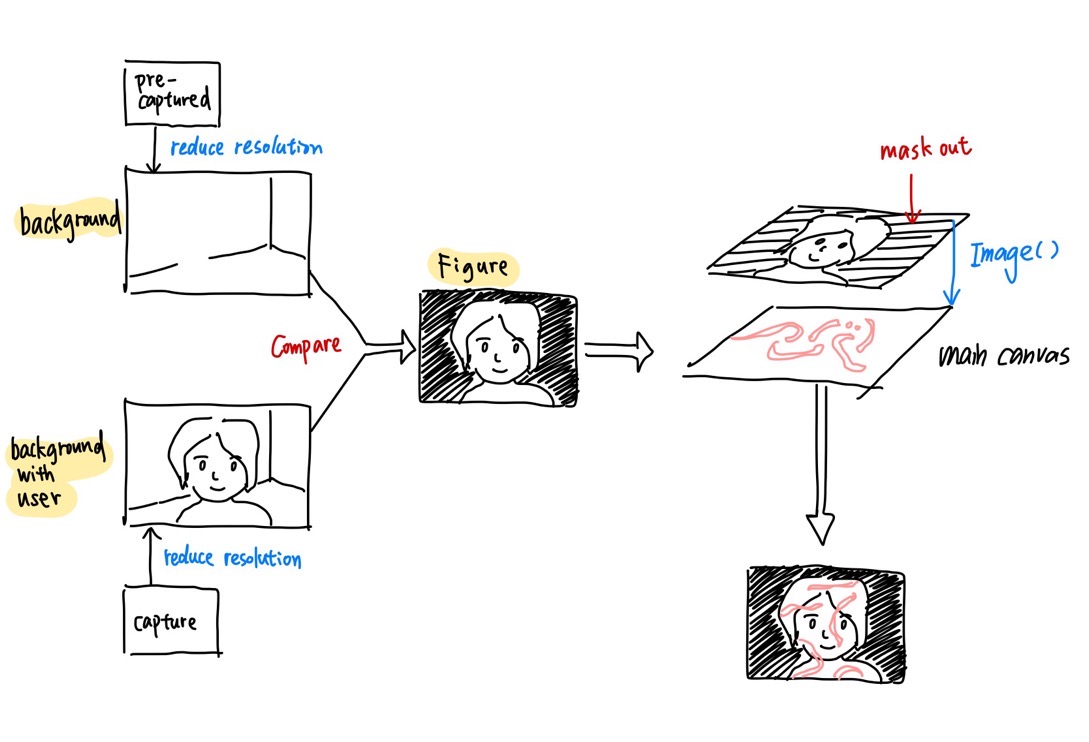

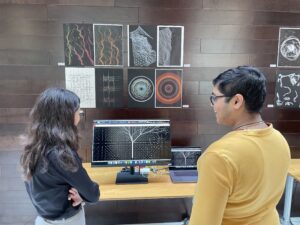

Here are a few different images of various renditions I tried out before settling with my final concept and sketch:

I also included all the trials I’ve tried in detail in my last post, which you can read here.

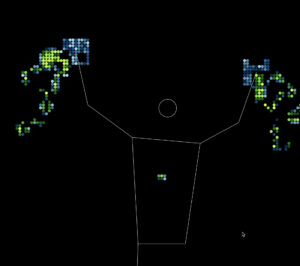

The final sketch layout I settled on last week was this:

After settling on the basic layout, I set out to build on the progress from my previous post by doing the following:

- Shortening the branches so they don’t grow as long (I didn’t like how long they were growing, because the tree lost its original shape) –> I changed the multiplier in the code snippet below from 0.9 to 0.7 to decrease the lengths of branches A and B.

```javascript
branchA() {
  let dir = p5.Vector.sub(this.end, this.begin);
  dir.rotate(PI / 4);
  dir.mult(0.7); // adjust this to adjust the lengths of the branches (previously 0.9)
  // ... (rest of the method)
}
```
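Because each new branch reuses its parent's direction, rotated by PI / 4 and multiplied by the same factor, branch lengths shrink geometrically: a branch n levels up is factor^n times the trunk. The plain-JavaScript sketch below reproduces only that math so the two factors can be compared; rotate2D, childDirection, and branchLengths are hypothetical helpers, not the project's Branch class.

```javascript
// Rotate a 2D vector {x, y} by `angle` radians (same math as p5.Vector.rotate).
function rotate2D(v, angle) {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return { x: v.x * c - v.y * s, y: v.x * s + v.y * c };
}

// Direction of a child branch: the parent's direction, rotated and then scaled.
function childDirection(begin, end, angle, factor) {
  const dir = rotate2D({ x: end.x - begin.x, y: end.y - begin.y }, angle);
  return { x: dir.x * factor, y: dir.y * factor };
}

// Lengths of successive branches, each grown from the previous one with
// branchA-style math (rotate by PI / 4, then multiply by `factor`).
function branchLengths(trunkLength, factor, levels) {
  const lengths = [];
  let begin = { x: 0, y: 0 };
  let end = { x: 0, y: -trunkLength }; // trunk points up in p5's y-down coordinates
  for (let i = 0; i < levels; i++) {
    lengths.push(Math.hypot(end.x - begin.x, end.y - begin.y));
    const dir = childDirection(begin, end, Math.PI / 4, factor);
    begin = end;
    end = { x: end.x + dir.x, y: end.y + dir.y };
  }
  return lengths;
}

console.log(branchLengths(100, 0.9, 5).map((n) => Math.round(n))); // [ 100, 90, 81, 73, 66 ]
console.log(branchLengths(100, 0.7, 5).map((n) => Math.round(n))); // [ 100, 70, 49, 34, 24 ]
```

With 0.7, a fifth-level branch is already under a quarter of the trunk's length, versus about two-thirds with 0.9, which matches the more compact tree shape described above.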

- Implementing audio files and linking them to the keyboard –> several sound snippets from various traditional instruments, each triggered by a different key, so that they form a harmony as the user generates more flowers on the canvas. Honestly, this was the most important and urgent feature, and I was nervous to experiment with it because I had never tried it before and wasn’t sure how it would work.

Here are the audio files I used:

- The main track that plays when the sketch opens
- Sound of the janggu (I added two versions of this)
- Sound of a percussion triangle
- Sound of a rainstick

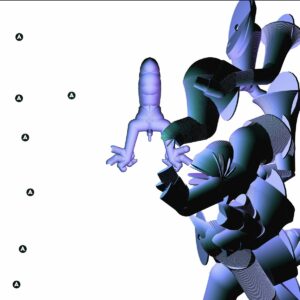

Before adding this to my actual sketch, I made a demo sketch so I could focus on testing the audio alone; below is the demo sketch, in which the A, B, C, D, and E keys each trigger their own audio file:

(Click the canvas once first, then press any of the A, B, C, D, or E keys on your keyboard while holding Shift or with Caps Lock on, since the sketch only responds to capital letters. This will play the corresponding audio.)

A code highlight of this demo was the keyPressed() function and the details I learned to implement inside it:

```javascript
function keyPressed() {
  // Check if the key corresponds to a loaded audio file
  if (key === 'A') {
    // Play or stop the audio file based on its current state
    if (A.isPlaying()) {
      A.stop();
    } else {
      A.play();
    }
  } else if (key === 'B') {
    if (B.isPlaying()) {
      B.stop();
    } else {
      B.play();
    }
  }
  // ... (the C, D, and E keys follow the same pattern)
}
```

Because I wanted users to be able to control the sounds, I used an if/else statement so that pressing a key once plays its audio and pressing it again stops it.

Once this was done and I was sure it would work smoothly, I added the code to my actual sketch.

After this, I decided that adding an instructions page would be helpful, so I wrote some basic instructions and had them appear before the actual sketch started running. For this, I reused the home screen portion of my Intro to IM final project code from last year and changed the properties accordingly:

```javascript
// Declare variables for home screen
let startButton;

// Initialize home screen
function initializeHomeScreen() {
  // Create start button
  startButton = createButton('Start');
  startButton.position(width / 2 - 30, height - 250);
  startButton.mousePressed(startMainCode);
}

// Draw home screen
function drawHomeScreen() {
  background(255); // Set background color for home screen
  textSize(15);
  fill(0);
  textAlign(CENTER, CENTER);

  // Display instructions
  let textY = height / 4;
  text('Welcome! Here are some basic instructions before you begin:', width / 2, textY);
  // ... (rest of the instructions)

  startButton.show();
}
```
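One way to think about the home screen is as a small state machine: draw() checks which screen is active each frame, and the Start button's callback switches the state once. The sketch below models that in plain JavaScript, assuming a single screen variable; createAppState, renderHome, renderMain, drawFrame, and handleStart are hypothetical names, and the project's actual startMainCode() body isn't shown here.

```javascript
// Hypothetical app state: which screen is active, plus a log of drawn frames.
function createAppState() {
  return { screen: 'home', frames: [] };
}

// Stand-ins for drawHomeScreen() and the main sketch's drawing code.
function renderHome(state) {
  state.frames.push('home');
}
function renderMain(state) {
  state.frames.push('main');
}

// What draw() would do every frame: render whichever screen is active.
function drawFrame(state) {
  if (state.screen === 'home') {
    renderHome(state);
  } else {
    renderMain(state);
  }
}

// What the Start button's callback would do. In p5, this is also where the
// button could be hidden, e.g. with startButton.hide().
function handleStart(state) {
  state.screen = 'main';
}
```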

12/7 In-Class User Testing & Progress Since Then:

Here are the suggestions I got from my classmates during Thursday’s class, which I found very helpful:

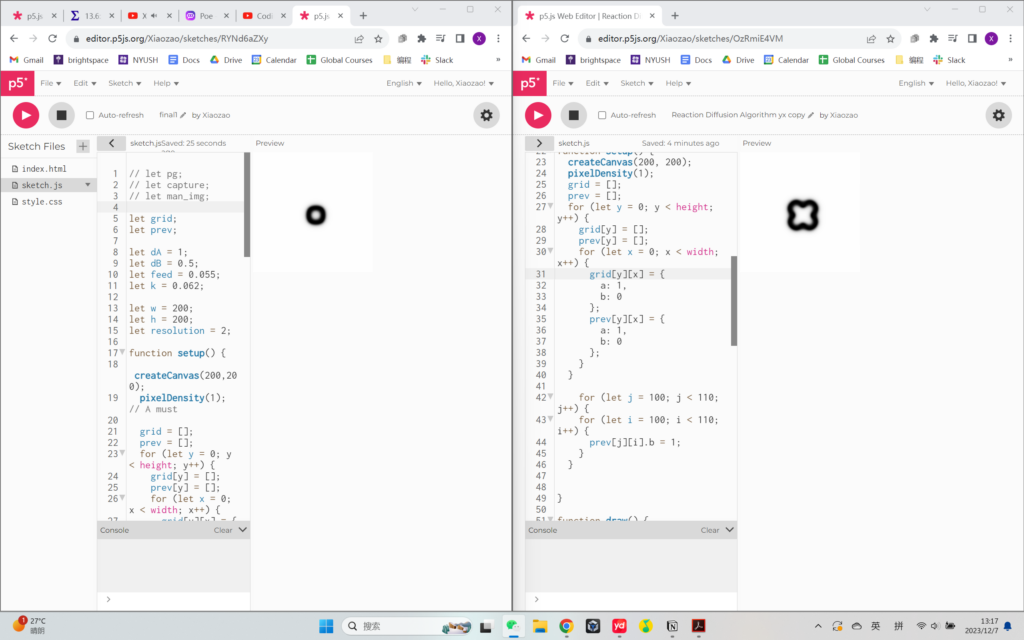

- Give more options for the background

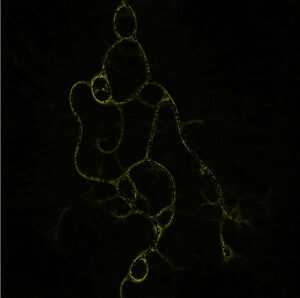

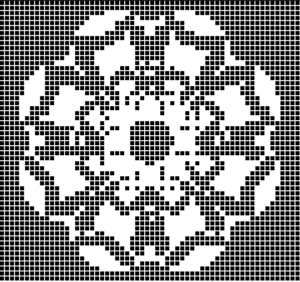

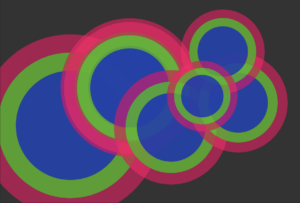

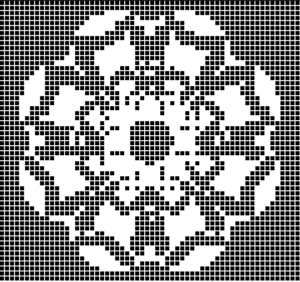

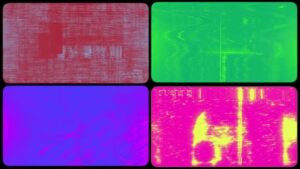

I thought this was a neat idea, so I decided to add another background option that users could manipulate via sliders. Xiaozao suggested making one of the background options a coded background, and from this idea I developed a pixelated sunset whose colors users could control with sliders. Here’s the demo sketch I created using this sample image:
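The demo's code isn't reproduced here, but one way to compute a pixelated sunset is to split the canvas into square cells and give each row of cells a color interpolated between a top color and a bottom color, both of which sliders could control. The sketch below assumes that approach; lerp, lerpRGB, pixelatedGradient, and the sample colors are hypothetical. In p5, each cell would then be drawn with fill() and rect(), and drawing one rectangle per cell rather than per pixel keeps the number of draw calls low.

```javascript
// Linearly interpolate between two numbers.
function lerp(a, b, t) {
  return a + (b - a) * t;
}

// Interpolate between two [r, g, b] colors, rounding each channel.
function lerpRGB(c1, c2, t) {
  return [0, 1, 2].map((i) => Math.round(lerp(c1[i], c2[i], t)));
}

// Build a grid of cell colors for a vertical gradient. All cells in a row
// share one color, so the cell size controls how blocky the sunset looks.
function pixelatedGradient(width, height, cellSize, topColor, bottomColor) {
  const rows = Math.ceil(height / cellSize);
  const cols = Math.ceil(width / cellSize);
  const grid = [];
  for (let r = 0; r < rows; r++) {
    const t = rows === 1 ? 0 : r / (rows - 1); // 0 at the top row, 1 at the bottom
    const color = lerpRGB(topColor, bottomColor, t);
    grid.push(Array.from({ length: cols }, () => color.slice()));
  }
  return grid;
}

// Example: an orange sky fading to purple, in 50-pixel cells.
const grid = pixelatedGradient(400, 200, 50, [255, 94, 77], [60, 20, 90]);
console.log(grid.length, grid[0].length); // 4 8
console.log(grid[0][0], grid[3][0]); // [ 255, 94, 77 ] [ 60, 20, 90 ]
```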

However, when I added this code to my sketch, it didn’t give the look I wanted, and I also noticed that the sketch was running slower, which I wanted to avoid. At this point, my sketch looked like this:

I still wanted to offer two backdrop options, but instead of coding the background, I decided to use two images. The idea of making the second backdrop a modern landscape of Korea came unexpectedly, and I thought it would make a fun comparison! So I created a toggle button that switches the background image on a mouse click, and added another landscape image from this link. After moving the toggle button to the bottom-right corner, I had this sketch:

- Idea I had: while the mouse is pressed, flowers keep being generated

For this, I added a new function, mouseDragged(), so that pressing and dragging the mouse across the canvas continuously generates flowers, as shown in the image below.

- Adjust the particles (their positions, number, repelling distance, etc.)

For this, I adjusted the flee(target) and update() methods in the Particle class, as well as the initializeMainCode() function, to 1) increase the number of particles, 2) limit the particles to the upper half of the canvas, and 3) make the particles’ repelling more noticeable.
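As an illustration of point 2, the sketch below spawns particles only in the upper half of the canvas and keeps them there in update(), bouncing them off the top edge and the midline. It uses plain {x, y} objects instead of p5.Vector, and BoundedParticle and spawnParticles are hypothetical names rather than the project's Particle class.

```javascript
// A particle that stays in the upper half of the canvas (hypothetical class).
class BoundedParticle {
  constructor(x, y) {
    this.position = { x, y };
    this.velocity = { x: 0, y: 0 };
  }

  // Move by the current velocity, then clamp y to [0, canvasHeight / 2],
  // reversing the vertical velocity whenever a boundary is hit.
  update(canvasHeight) {
    this.position.x += this.velocity.x;
    this.position.y += this.velocity.y;
    const maxY = canvasHeight / 2;
    if (this.position.y < 0) {
      this.position.y = 0;
      this.velocity.y *= -1;
    } else if (this.position.y > maxY) {
      this.position.y = maxY;
      this.velocity.y *= -1;
    }
  }
}

// Create `count` particles at random positions within the upper half.
function spawnParticles(count, canvasWidth, canvasHeight) {
  const particles = [];
  for (let i = 0; i < count; i++) {
    const x = Math.random() * canvasWidth;
    const y = Math.random() * (canvasHeight / 2);
    particles.push(new BoundedParticle(x, y));
  }
  return particles;
}
```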

- SVG file export –> Screenshot feature

Lukrecija suggested this idea, and I thought it was so clever. However, I figured a screenshot feature would be easier than SVG export, because I remembered the SVG feature making my sketch lag a lot during my midterm project.

For this, I created a “Take Screenshot” button: after declaring screenshotButton as a variable and creating the button in initializeMainCode(), I added the following function:

```javascript
function takeScreenshot() {
  // Save the current canvas as an image
  saveCanvas('screenshot', 'png');
}
```

Once I had the finalized sketch, I just adjusted it to go into full screen mode, and I was good to go!

Final Sketch:

Implementation:

The interactive aspects of this sketch are the following:

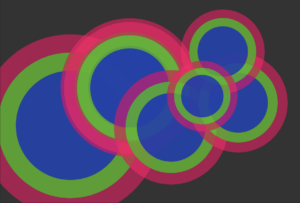

- Particles: these use steering, fleeing, wandering, and similar behaviors; when users hover the mouse near the particles, they’ll see the particles being repelled by the mouse.

Code snippet:

```javascript
flee(target) {
  let desired = p5.Vector.sub(this.position, target);
  let d = desired.mag();
  if (d < 150) {
    // Normalize the desired vector
    desired.normalize();
    // Set a fixed magnitude for consistent repelling force
    let repelMagnitude = 10; // Adjust the repelling force as needed
    // Scale the vector to the fixed magnitude
    desired.mult(repelMagnitude);
    let steer = p5.Vector.sub(desired, this.velocity);
    steer.limit(this.maxSpeed);
    this.applyForce(steer);
  }
}
```

This is the fleeing behavior I added to the Particle class, and it was the main part of the class I kept changing to get the desired speed and distance for the particles’ repelling behavior.
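The same steering math can be checked outside p5 with plain objects. The sketch below mirrors flee(target): a desired vector pointing away from the target, scaled to a fixed magnitude of 10 inside a 150-pixel radius, minus the current velocity, capped by maxSpeed. The helpers sub, mag, setMag, limit, and fleeForce are hypothetical stand-ins for the corresponding p5.Vector operations.

```javascript
// Minimal 2D vector helpers standing in for p5.Vector methods.
const sub = (a, b) => ({ x: a.x - b.x, y: a.y - b.y });
const mag = (v) => Math.hypot(v.x, v.y);
// Scale v to length m (a zero vector stays zero).
const setMag = (v, m) => {
  const len = mag(v);
  return len === 0 ? { x: 0, y: 0 } : { x: (v.x / len) * m, y: (v.y / len) * m };
};
// Cap v's length at max.
const limit = (v, max) => (mag(v) > max ? setMag(v, max) : v);

// Mirrors flee(target): returns the steering force, or null when the target
// is outside the radius (the original applies no force in that case).
function fleeForce(position, velocity, target, maxSpeed, radius = 150, repelMagnitude = 10) {
  const away = sub(position, target); // points from the target toward the particle
  if (mag(away) >= radius) return null;
  const desired = setMag(away, repelMagnitude); // normalize() + mult(repelMagnitude)
  const steer = sub(desired, velocity);
  return limit(steer, maxSpeed);
}

console.log(fleeForce({ x: 100, y: 0 }, { x: 0, y: 0 }, { x: 0, y: 0 }, 4)); // { x: 4, y: 0 }
console.log(fleeForce({ x: 200, y: 0 }, { x: 0, y: 0 }, { x: 0, y: 0 }, 4)); // null
```

This keeps maxSpeed as the cap to match the snippet above; many steering examples cap the force with a separate maxForce value instead, which makes it easier to tune how sharply particles turn independently of how fast they move.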

- Flowers: flowers grow on the canvas with each mouse click, and users have two options: 1) a single mouse click generates one flower, and 2) pressing and dragging the mouse across the canvas keeps generating flowers.

Code snippet:

```javascript
function mousePressed() {
  if (currentPatternIndex === 0) {
    let flower = new KoreanFlower(mouseX, mouseY);
    flowers.push(flower);
    patterns.push(flower);
  } else if (currentPatternIndex === 1) {
    let tree = new FractalTree(mouseX, mouseY);
    patterns.push(tree);

    // Generate additional flowers around the tree
    for (let i = 0; i < 10; i++) {
      let flower = new KoreanFlower(tree.x + random(-50, 50), tree.y + random(-50, 50));
      flowers.push(flower);
      patterns.push(flower);
    }
  }
}
```

The mousePressed() function generates one flower per mouse click (or, when the tree pattern is selected, a fractal tree surrounded by ten flowers).

```javascript
function mouseDragged() {
  // Check if the mouse is continuously pressed
  if (mouseIsPressed) {
    // Generate a flower at the current mouse position
    if (currentPatternIndex === 0) {
      let flower = new KoreanFlower(mouseX, mouseY);
      flowers.push(flower);
      patterns.push(flower);
    }
  }
}
```

The mouseDragged() function keeps generating flowers as you drag the mouse across the canvas while holding the button down.

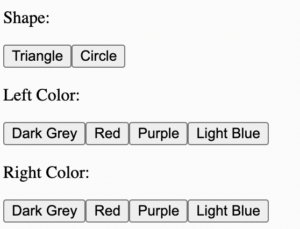

- Background: users can choose between a gradient background and an image background using the buttons below the canvas, and they can also adjust the colors of the gradient using sliders.

Code snippet:

```javascript
let backgroundImage1;
let backgroundImage2;
let currentBackgroundImage;
```

I first declared both images as variables and set the first one (the traditional painting) as the initial background image.

```javascript
function toggleBackground() {
  // Toggle between the two background images
  if (currentBackgroundImage === backgroundImage1) {
    currentBackgroundImage = backgroundImage2;
  } else {
    currentBackgroundImage = backgroundImage1;
  }
}
```

A key part of the background images was the toggleBackground() function, which was a new feature I tried implementing in my code! It let me switch back and forth between the two backdrops as easily as possible.
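The if/else in toggleBackground() works well for two images. If more backdrops were added later, one alternative is to keep them in an array and advance an index with the modulo operator, as in the sketch below; backgrounds, backgroundIndex, and nextBackground are hypothetical names, and the strings stand in for the p5.Image objects that loadImage() returns.

```javascript
// Strings stand in for the p5.Image objects returned by loadImage().
const backgrounds = ['irworobongdo', 'modern-landscape'];
let backgroundIndex = 0; // start with the traditional painting

// Advance to the next backdrop, wrapping back to the first after the last.
function nextBackground() {
  backgroundIndex = (backgroundIndex + 1) % backgrounds.length;
  return backgrounds[backgroundIndex];
}
```

With two entries this behaves exactly like the if/else version; adding a third backdrop would only require another array entry.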

- Audio: users can press the A, B, C, D, and E keys to mix and play the different audio files as they wish, generating music of their own.

Code snippet:

```javascript
// declare audio files
let A;
let B;
let C;
let D;
let E;

// ...

function preload() {
  // ...

  // Load audio files
  A = loadSound('A.MP3');
  B = loadSound('B.MP3');
  C = loadSound('C.MP3');
  D = loadSound('D.MP3');
  E = loadSound('E.MP3');
}
```

I first uploaded the audio files, declared a variable for each one, and loaded them in preload().

```javascript
// for audio files
function keyPressed() {
  // Check if the key corresponds to a loaded audio file
  if (key === 'A') {
    // Play or stop the audio file based on its current state
    if (A.isPlaying()) {
      A.stop();
    } else {
      A.play();
    }
  } else if (key === 'B') {
    if (B.isPlaying()) {
      B.stop();
    } else {
      B.play();
    }
  } else if (key === 'C') {
    // Play or stop the audio file associated with the 'C' key
    if (C.isPlaying()) {
      C.stop();
    } else {
      C.play();
    }
  } else if (key === 'D') {
    // Play or stop the audio file associated with the 'D' key
    if (D.isPlaying()) {
      D.stop();
    } else {
      D.play();
    }
  } else if (key === 'E') {
    // Play or stop the audio file associated with the 'E' key
    if (E.isPlaying()) {
      E.stop();
    } else {
      E.play();
    }
  }
}
```

Then I created a keyPressed() function specifically for the audio files, linking each key to an audio file so that the first press plays it and the second press stops it.
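Since the five branches of the if/else chain differ only in which sound they touch, the same behavior can be expressed with a lookup table from key to sound. The sketch below assumes that refactor; FakeSound is a hypothetical stand-in that implements only the three p5.SoundFile methods the sketch calls (isPlaying(), play(), and stop()) so the code runs outside the browser, and toggleSoundForKey is a hypothetical name. In the real sketch, keyPressed() would call toggleSoundForKey(key) with p5's global key variable, and the Map values would be the objects returned by loadSound().

```javascript
// Stand-in for p5.SoundFile with only the methods the sketch uses.
class FakeSound {
  constructor(fileName) {
    this.fileName = fileName;
    this.playing = false;
  }
  isPlaying() {
    return this.playing;
  }
  play() {
    this.playing = true;
  }
  stop() {
    this.playing = false;
  }
}

// Key-to-sound table; in the sketch, the values would come from loadSound().
const sounds = new Map(
  ['A', 'B', 'C', 'D', 'E'].map((k) => [k, new FakeSound(`${k}.MP3`)])
);

// Toggle the sound mapped to `key`: play it if stopped, stop it if playing.
// Returns the new playing state, or null when no sound is mapped to the key.
function toggleSoundForKey(key) {
  const sound = sounds.get(key);
  if (!sound) return null;
  if (sound.isPlaying()) {
    sound.stop();
  } else {
    sound.play();
  }
  return sound.isPlaying();
}
```

Lookups stay case-sensitive, matching the key === 'A' checks; if lowercase presses should also work, sounds.get(key.toUpperCase()) is one option.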

Links Used:

I watched a few tutorials (video1, video2, video3) for various parts of my sketch, such as the gradient background, the fractal tree, and implementing audio. All the other images and audio files I used are linked either in my previous post or elsewhere in this post.

Parts I’m Proud Of:

Honestly, I’m proud of the entire sketch rather than any one part, because I think it’s packed with different details, options, and functions; I wanted to give users as many variations as possible so they could have as much creative freedom as they wished. If I had to choose, I’m most proud of successfully letting users manipulate both the visual sketch and the audio through sliders, the keyboard, and the mouse, making the entire sketch an embodiment of interactivity.

I also tried out many new features, such as the background toggle and screenshot functions, and I was proud of getting them to run smoothly in my sketch!

Challenges:

Something I struggled with for a long time was loading the audio files and getting them to play, because I couldn’t figure out why the sketch kept showing error messages; it turned out that I hadn’t capitalized the “.MP3” extension of the audio file names in my code. File paths are usually case-sensitive when loaded over the web, so “A.mp3” and “A.MP3” count as different files. It was one of those “aha” moments with p5.js about being extra careful with the details, haha.

```javascript
function preload() {
  // Load audio files
  A = loadSound('A.MP3');
  B = loadSound('B.MP3');
  C = loadSound('C.MP3');
  D = loadSound('D.MP3');
  E = loadSound('E.MP3');
}
```
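Because this kind of mistake only shows up as a loading error, a small check like the one below can point directly at it. It's a hypothetical helper, assuming you have a list of the uploaded file names: it reports every path passed to loadSound() that matches an uploaded file only when capitalization is ignored.

```javascript
// Report requested paths that match an uploaded file only case-insensitively.
function findCaseMismatches(uploadedFiles, requestedPaths) {
  const exact = new Set(uploadedFiles);
  const byLower = new Map(uploadedFiles.map((f) => [f.toLowerCase(), f]));
  const mismatches = [];
  for (const path of requestedPaths) {
    if (exact.has(path)) continue; // exact match: fine
    const actual = byLower.get(path.toLowerCase());
    if (actual) mismatches.push({ requested: path, actual });
  }
  return mismatches;
}

console.log(findCaseMismatches(['A.MP3', 'B.MP3'], ['A.mp3', 'B.MP3']));
// [ { requested: 'A.mp3', actual: 'A.MP3' } ]
```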

I also struggled with implementing the coded pixelated sunset background, running into new difficulties every time I tried a different method. For example, the sketch treated clicks on the gradient and original background buttons as ordinary mouse presses on the canvas, so pressing them generated flowers instead of switching the background.
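One way around this is to ignore presses that land outside the canvas or on top of a button. p5's global mousePressed() is triggered by clicks anywhere on the page, including on DOM buttons created with createButton(), which is consistent with the behavior described above, while mouseX and mouseY are measured relative to the canvas. The sketch below assumes the button areas are known as rectangles; isInsideRect, shouldSpawnFlower, and the coordinates in the example are hypothetical.

```javascript
// True if (x, y) lies inside the rectangle {x, y, w, h}.
function isInsideRect(x, y, rect) {
  return x >= rect.x && x <= rect.x + rect.w && y >= rect.y && y <= rect.y + rect.h;
}

// Decide whether a press at (mx, my), in canvas coordinates like mouseX and
// mouseY, should create a flower: it must be on the canvas and not on a button.
function shouldSpawnFlower(mx, my, canvasWidth, canvasHeight, buttonRects) {
  const onCanvas = mx >= 0 && mx <= canvasWidth && my >= 0 && my <= canvasHeight;
  if (!onCanvas) return false;
  return !buttonRects.some((rect) => isInsideRect(mx, my, rect));
}

// Example: an 800x600 canvas with a toggle button in the bottom-right corner.
const buttons = [{ x: 700, y: 550, w: 90, h: 40 }];
console.log(shouldSpawnFlower(100, 100, 800, 600, buttons)); // true
console.log(shouldSpawnFlower(720, 560, 800, 600, buttons)); // false (on the button)
console.log(shouldSpawnFlower(100, 650, 800, 600, buttons)); // false (below the canvas)
```

Inside mousePressed(), an early return such as `if (!shouldSpawnFlower(mouseX, mouseY, width, height, buttons)) return;` would skip flower generation for those clicks. Another option is registering the handler on the canvas element itself, for example calling .mousePressed() on the object returned by createCanvas(), so button clicks never reach it.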

User Interaction Videos from the IM Showcase:

Today’s showcase was a success! It was really rewarding to see people enjoying the interaction and appreciating my work.

Here are a few videos:

IMG_2671 IMG_2673

As well as photos:

Future Improvements:

In the future, I’d like to code multiple fractal trees so that together they form a forest, a landscape of its own.

Another feature I’d like to try is associating different audio files with each backdrop so that the audio better fits the image’s time period or atmosphere; for example, for the modern landscape, I’d like to add K-pop tracks or more modern instruments such as guitar and piano.
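As a starting point for that idea, the sketch below maps each backdrop to its own set of key-to-file assignments, so toggling the background could also change which files the keys play. Only the traditional file names come from this project; the modern file names, soundSets, hasOwn, and soundFileFor are hypothetical placeholders, and in p5 the files would still need to be loaded in preload().

```javascript
// Only the "traditional" names come from the project; "modern" ones are placeholders.
const soundSets = {
  traditional: { A: 'A.MP3', B: 'B.MP3', C: 'C.MP3', D: 'D.MP3', E: 'E.MP3' },
  modern: { A: 'guitar.mp3', B: 'piano.mp3' },
};

// Own-property check, so keys like "toString" are never treated as mapped.
const hasOwn = (obj, k) => Object.prototype.hasOwnProperty.call(obj, k);

// Return the file for `key` under the given backdrop, or null if none.
function soundFileFor(backdrop, key) {
  if (!hasOwn(soundSets, backdrop)) return null;
  const set = soundSets[backdrop];
  return hasOwn(set, key) ? set[key] : null;
}
```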

Overall, I felt I could really showcase all the lessons and skills I learned in this class this semester in this final project, and I’m very satisfied with my work!

I tried various approaches to mitigate this:
