Concept and Idea

The main idea behind my midterm project was to create a generative landscape that evolves over time. This would allow users to interact with the scene via a slider and explore the beauty of dynamic elements like a growing tree, shifting daylight, and a clean, natural environment. I wanted to focus on simple yet beautiful visual aesthetics while ensuring interactivity and real-time manipulation, aiming for a calm user experience.

Code Development and Functionality

Time Slider: The time slider dynamically adjusts the time of day, transitioning from morning to night. The background color shifts gradually, and the sun and moon rise and fall in sync with the time value.
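The sun's path can be reproduced outside p5.js. The plain JavaScript below mirrors the formulas in updateSunAndMoon() from the full code. In the first half of the slider, the sun's x runs from -50 to W + 50, and its y follows a sine arc that peaks mid-sky. mapRange() is a hypothetical stand-in for p5's map(), so the snippet runs without the library.

```javascript
// Hypothetical stand-in for p5's map(): linear remap without clamping
function mapRange(v, inMin, inMax, outMin, outMax) {
  return outMin + ((v - inMin) * (outMax - outMin)) / (inMax - inMin);
}

// Sun position for a slider value in [0, 50], same formula as updateSunAndMoon()
function sunPosition(timeValue, width, height) {
  const x = mapRange(timeValue, 0, 50, -50, width + 50);
  // sin(0) = sin(PI) = 0 at the edges; sin(PI / 2) = 1 at the midpoint (highest point)
  const y = height * 0.8 - Math.sin(mapRange(x, -50, width + 50, 0, Math.PI)) * height * 0.5;
  return { x, y };
}

const W = 650, H = 450;
for (const t of [0, 25, 50]) {
  const { x, y } = sunPosition(t, W, H);
  console.log(`t=${t}: x=${x.toFixed(1)}, y=${y.toFixed(1)}`);
}
// t=0: x=-50.0, y=360.0
// t=25: x=325.0, y=135.0
// t=50: x=700.0, y=360.0
```

The moon uses the same formula over slider values 50 to 100, so it traces an identical arc during the second half.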

Main Tree Rendering: The project features a main tree at the center of the canvas. The tree is built recursively, with branches and leaves following predefined patterns plus small random variations to give it a natural look. I worked hard to make sure that the tree’s behavior felt organic.

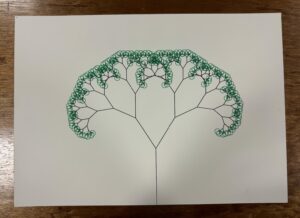

SVG Export: One of the key functionalities of this project is the SVG Export feature, which allows users to save a snapshot of the generated landscape in high-quality vector format. This export option lets users preserve the art they create during the interaction and take a piece of the generative landscape with them.

Code Snippets Explanation:

Background Color

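```javascript
function updateBackground(timeValue, renderer = this) {
  let sunriseColor = color(255, 102, 51);
  let sunsetColor = color(30, 144, 255);
  let nightColor = color(25, 25, 112);
  let transitionColor; // Top color of the gradient
  let c2; // Bottom color of the gradient

  if (timeValue > 50) {
    // Second half: fade from sunsetColor into night
    transitionColor = lerpColor(sunsetColor, nightColor, (timeValue - 50) / 50);
    c2 = lerpColor(color(255, 127, 80), nightColor, (timeValue - 50) / 50);
  } else {
    // First half: divide by 50 so the blend reaches sunsetColor exactly at 50,
    // where the second half starts; dividing by 100 would jump at the boundary
    transitionColor = lerpColor(sunriseColor, sunsetColor, timeValue / 50);
    c2 = color(255, 127, 80);
  }

  setGradient(0, 0, W, H, transitionColor, c2, Y_AXIS, renderer);
}
```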

This part of the code gradually shifts the background color from sunrise to sunset and into night, giving the entire scene a fluid sense of time passing. It was highly rewarding to see the colors change with smooth transitions based on user input.

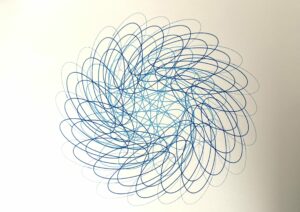
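To make the two-phase blend concrete, here is a minimal plain-JavaScript sketch of the gradient's top and bottom colors as a function of the slider value. Colors are [r, g, b] arrays, and lerpRGB() is a hypothetical stand-in for p5's lerpColor() in its default RGB mode. The first phase divides by 50, so both halves meet at the sunset color when the slider is at 50. Dividing by 100 there would stop halfway, and the sky would jump at the boundary.

```javascript
// Colors as [r, g, b]; values taken from updateBackground()
const SUNRISE = [255, 102, 51];
const SUNSET = [30, 144, 255];
const NIGHT = [25, 25, 112];
const CORAL = [255, 127, 80];

// Hypothetical stand-in for p5's lerpColor() in RGB mode (amt clamped to [0, 1])
function lerpRGB(a, b, amt) {
  const t = Math.min(1, Math.max(0, amt));
  return a.map((v, i) => v + (b[i] - v) * t);
}

// Top and bottom gradient colors for a slider value in [0, 100]
function skyColors(timeValue) {
  if (timeValue > 50) {
    const t = (timeValue - 50) / 50;
    return { top: lerpRGB(SUNSET, NIGHT, t), bottom: lerpRGB(CORAL, NIGHT, t) };
  }
  return { top: lerpRGB(SUNRISE, SUNSET, timeValue / 50), bottom: CORAL };
}

console.log(skyColors(0).top);   // [ 255, 102, 51 ]
console.log(skyColors(50).top);  // [ 30, 144, 255 ]
console.log(skyColors(100).top); // [ 25, 25, 112 ]
```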

Tree Shape Rendering

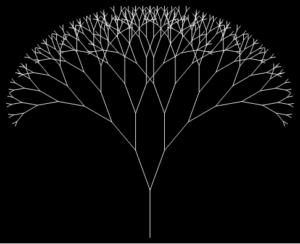

At the heart of this project is the main tree, which is generated recursively as part of the landscape. The goal is to have the tree shift in both its shape and direction each time it is rendered, adding an element of unpredictability and natural randomness. The tree is designed to grow recursively, with branches and leaves adjusting their position and angles in a way that mimics the organic growth patterns found in nature.

```javascript
function drawTree(depth, renderer = this) {
  renderer.stroke(139, 69, 19); // Brown trunk and branches
  renderer.strokeWeight(3 - depth); // Adjust stroke weight for consistency
  branch(depth, renderer); // Call the branch function to draw the tree
}

function branch(depth, renderer = this) {
  if (depth < 10) {
    renderer.line(0, 0, 0, -H / 15); // Draw one segment upward
    renderer.translate(0, -H / 15); // Move the origin to the segment's tip
    renderer.rotate(random(-0.05, 0.05)); // Slight random bend

    if (random(1.0) < 0.7) {
      // 70% of the time: split into two children, 0.3 rad to either side, at 80% scale
      renderer.rotate(0.3);
      renderer.scale(0.8);
      renderer.push();
      branch(depth + 1, renderer); // Recursively draw branches
      renderer.pop();
      renderer.rotate(-0.6);
      renderer.push();
      branch(depth + 1, renderer);
      renderer.pop();
    } else {
      // Otherwise: extend the same branch by another segment at the same depth
      branch(depth, renderer);
    }
  } else {
    drawLeaf(renderer); // Draw leaves when the branch reaches its end
  }
}
```

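Currently, the tree renders with the same basic structure every time the canvas is started. This is because redrawCanvas() calls randomSeed(treeShapeSeed) right before drawing the tree, and treeShapeSeed is always 0, so every call to random() replays the same sequence. The recursive branch() function keeps the tree roughly symmetric: each segment splits into two children rotated 0.3 radians to either side and scaled to 80% (or, 30% of the time, extends by another segment first), until depth 10, where leaves are drawn. The random rotation (rotate(random(-0.05, 0.05))) creates slight variations in the branch angles, but overall the tree maintains a consistent shape and direction.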
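The push()/pop() pairs in branch() are what let each child start from its parent's tip with its own rotation and scale. The sketch below models that recursion in plain JavaScript. It passes an explicit transform (position, heading, scale) down by value instead of using a renderer's matrix stack, and it counts segments and leaf clusters instead of drawing them. As a deliberate simplification, it always splits and has no random bend, so the counts are deterministic. This is not the sketch's actual output.

```javascript
// Simplified, deterministic model of branch(): always splits, no random jitter.
// Each call gets its own copy of the transform, which plays the role of push()/pop().
const SEGMENT = 450 / 15; // H / 15 with H = 450
const MAX_DEPTH = 10;

function branchModel(depth, t, out) {
  if (depth >= MAX_DEPTH) {
    out.leaves.push({ x: t.x, y: t.y }); // drawLeaf() would run here
    return;
  }
  // One segment along the current heading (angle 0 = straight up, y grows downward)
  const len = SEGMENT * t.scale;
  const tip = { x: t.x + Math.sin(t.angle) * len, y: t.y - Math.cos(t.angle) * len };
  out.segments += 1;

  // Like rotate(0.3) + scale(0.8), then rotate(-0.6): children at +0.3 and -0.3 rad
  const childScale = t.scale * 0.8;
  branchModel(depth + 1, { ...tip, angle: t.angle + 0.3, scale: childScale }, out);
  branchModel(depth + 1, { ...tip, angle: t.angle - 0.3, scale: childScale }, out);
}

const out = { segments: 0, leaves: [] };
branchModel(0, { x: 0, y: 0, angle: 0, scale: 1 }, out);
console.log(out.segments, out.leaves.length); // 1023 1024
```

Because the real branch() sometimes extends instead of splitting and adds random bends, its segment count depends on the seed. The model only shows how the recursion and the saved and restored transform fit together.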

This stable and predictable behavior is useful for ensuring that the tree grows in a visually balanced way, without unexpected distortions or shapes. The slight randomness in the angles gives it a natural feel, but the tree maintains its overall form each time the canvas is refreshed.

This part of the project focuses on the visual consistency of the tree, which helps maintain the aesthetic of the landscape. While the tree doesn’t yet shift in shape or direction with every render, the current design showcases the potential for more complex growth patterns in the future.

Challenges

Throughout the development of this project, several challenges arose, particularly regarding the tree shadow, sky color transitions, tree shape, and ensuring the SVG export worked correctly. While I’ve made significant progress, overcoming these obstacles required a lot of experimentation and adjustment to ensure everything worked together harmoniously.

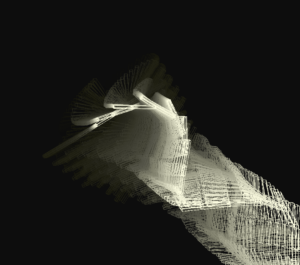

1. Tree Shadow Rendering

One of the key challenges was handling the tree shadow. I wanted the shadow to appear on the canvas in a way that realistically reflects the position of the sun or moon. However, creating a shadow that behaves naturally while keeping the tree itself visually consistent was tricky. The biggest challenge came when trying to manage the transformations (translate() and rotate()) needed to properly position the shadow, while ensuring that it didn’t overlap awkwardly with the tree or its branches.

I was also careful to make sure the shadow was omitted from the SVG export, as shadows often don’t look as polished in vector format. Balancing these two render modes was a challenge, but I’m happy with the final result, where the shadow appears on the canvas but is left out when saved as an SVG.
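The shadow's direction comes from the horizontal position of whichever light is in the sky. Here is a minimal plain-JavaScript sketch of that mapping, using the same -PI/4 to PI/4 range as redrawCanvas(). It chooses the light from the slider value rather than from whether sunX is set, because a global like sunX keeps its last value after sunset. mapRange() is a hypothetical stand-in for p5's map().

```javascript
// Hypothetical stand-in for p5's map(): linear remap without clamping
function mapRange(v, inMin, inMax, outMin, outMax) {
  return outMin + ((v - inMin) * (outMax - outMin)) / (inMax - inMin);
}

// Shadow rotation (radians) for a slider value in [0, 100] on a canvas of the given width
function shadowAngle(timeValue, width) {
  // Same x formulas as updateSunAndMoon(): sun for [0, 50], moon for (50, 100]
  const lightX = timeValue <= 50
    ? mapRange(timeValue, 0, 50, -50, width + 50)
    : mapRange(timeValue, 50, 100, -50, width + 50);
  return mapRange(lightX, 0, width, -Math.PI / 4, Math.PI / 4);
}

console.log(shadowAngle(10, 650).toFixed(3)); // -0.544 (morning sun on the left)
console.log(shadowAngle(90, 650).toFixed(3)); // 0.544 (late moon on the right)
```

Because map() does not clamp, a light just off-screen (x = -50 or W + 50) gives an angle slightly beyond PI/4 in magnitude.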

2. Sky Color Transitions

Another challenge was smoothly transitioning the sky color based on the time of day, controlled by the slider. Initially, it was difficult to make the gradient between sunrise, sunset, and nighttime feel natural and visually appealing. Blending colors this subtly made it hard to keep the transitions smooth, and sudden jumps happened far more often than I wanted.

Using the lerpColor() function to blend the sky colors as the slider changes allowed me to create a more cohesive visual experience. Finding the right balance between the colors and timing took a lot of trial and error. Ensuring this transition felt smooth was critical to the overall atmosphere of the scene.
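One way to track down sudden jumps is to step through every integer slider value and measure how far the sky color moves between neighbors. This plain-JavaScript sketch does that for the top gradient color. It compares a first phase that divides by 100 with one that divides by 50. lerpRGB(), topColor() and maxStep() are hypothetical helpers, and "distance" here is plain Euclidean distance in RGB.

```javascript
const SUNRISE = [255, 102, 51];
const SUNSET = [30, 144, 255];
const NIGHT = [25, 25, 112];

// Hypothetical stand-in for p5's lerpColor() in RGB mode
function lerpRGB(a, b, amt) {
  return a.map((v, i) => v + (b[i] - v) * amt);
}

// Top sky color; firstPhaseDivisor is the divisor used for slider values up to 50
function topColor(timeValue, firstPhaseDivisor) {
  return timeValue > 50
    ? lerpRGB(SUNSET, NIGHT, (timeValue - 50) / 50)
    : lerpRGB(SUNRISE, SUNSET, timeValue / firstPhaseDivisor);
}

// Largest RGB distance between consecutive integer slider values
function maxStep(firstPhaseDivisor) {
  let worst = { at: 0, dist: 0 };
  for (let t = 1; t <= 100; t++) {
    const a = topColor(t - 1, firstPhaseDivisor);
    const b = topColor(t, firstPhaseDivisor);
    const dist = Math.hypot(...a.map((v, i) => v - b[i]));
    if (dist > worst.dist) worst = { at: t, dist };
  }
  return worst;
}

const coarse = maxStep(100);
const fine = maxStep(50);
console.log(coarse.at, coarse.dist.toFixed(1)); // 51 151.2
console.log(fine.dist.toFixed(1)); // 6.1
```

With a divisor of 100, the largest change is a single jump of about 151 RGB units between slider values 50 and 51, while every other step is roughly 3 to 4. With 50, the largest step is about 6, and it is spread evenly across the first half.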

3. SVG File Export

One of the more technical challenges was ensuring that the SVG export functionality worked seamlessly, capturing the landscape in vector format without losing the integrity of the design. Exporting the tree and sky while excluding the shadow required careful handling of the different renderers used for canvas and SVG. The transformations that worked for the canvas didn’t always translate perfectly to the SVG format, causing elements to shift out of place or scale incorrectly.

Additionally, I needed to ensure that the tree was positioned correctly in the SVG file. The global translate() only transforms the main canvas, so the SVG version has to call renderer.translate() on the graphics object itself. Making sure all elements appeared in their proper positions while maintaining the overall aesthetic of the canvas version was a delicate process.

4. Switching Between SVG and Canvas Rendering

In the project, switching between SVG rendering and canvas rendering is essential to ensure the artwork can be viewed in real-time on the canvas and saved as a high-quality SVG file. These two rendering contexts behave differently, so specific functions must handle the drawing process correctly in each mode.

Overview of the Switch

- Canvas Rendering: This is the default rendering context where everything is drawn in real-time on the web page. The user interacts with the canvas, and all elements (like the tree, sky, and shadows) are displayed dynamically.

- SVG Rendering: This mode is activated when the user wants to save the artwork as a vector file (SVG). Unlike the canvas, SVG is a scalable format, so certain features (such as shadows) are omitted to keep the output clean. SVG rendering requires switching to a separate rendering context created with createGraphics(W, H, SVG), where the SVG renderer is provided by the p5.js-svg library.

Code Implementation for the Switch

The following code shows how the switch between canvas rendering and SVG rendering is handled:

```javascript
// Function to save the canvas as an SVG without shadow
function saveCanvasAsSVG() {
  let svgCanvas = createGraphics(W, H, SVG); // Use createGraphics for SVG rendering
  redrawCanvas(svgCanvas); // Redraw everything onto the SVG canvas
  save(svgCanvas, "myLandscape.svg"); // Save the rendered SVG
  svgCanvas.remove(); // Remove the SVG renderer to free memory
}
```

Here’s how the switching process works:

- Create an SVG Graphics Context: When saving the artwork as an SVG, we create a separate graphics context using createGraphics(W, H, SVG). This context behaves like a normal p5.js canvas, but it renders everything as SVG instead of raster graphics. The SVG has the same dimensions as the canvas (W and H).

- Redraw Everything on the SVG: After creating the SVG context, we call redrawCanvas(svgCanvas) to redraw the entire scene on the SVG renderer. This ensures that everything (like the tree and background) is rendered as part of the vector file, but without elements like shadows, which may not look good in an SVG.

- Save the SVG: Once everything has been drawn on svgCanvas, the save() function saves the SVG file locally on the user’s device, capturing the entire artwork as a scalable vector file for further use.

- Remove the SVG Renderer: After saving, we call svgCanvas.remove() to remove the SVG renderer and free its memory, so the unused graphics context does not linger once the file has been saved.
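The idea behind this switch can be shown without p5.js: pass the drawing target into the scene routine, so that one function can paint either the live canvas or an export target. In this hypothetical sketch, RecordingRenderer only logs draw calls, drawScene() stands in for redrawCanvas(), and the includeShadow flag plays the role of the renderer === this check.

```javascript
// Hypothetical renderer that records calls instead of drawing
class RecordingRenderer {
  constructor(name) {
    this.name = name;
    this.calls = [];
  }
  background(...args) { this.calls.push(["background", ...args]); }
  drawTree() { this.calls.push(["tree"]); }
  drawShadow() { this.calls.push(["shadow"]); }
}

// One scene routine for every target; only the live canvas gets the shadow
function drawScene(renderer, { includeShadow }) {
  renderer.background(135, 206, 235);
  renderer.drawTree();
  if (includeShadow) renderer.drawShadow();
}

const liveCanvas = new RecordingRenderer("canvas");
const svgTarget = new RecordingRenderer("svg");
drawScene(liveCanvas, { includeShadow: true });
drawScene(svgTarget, { includeShadow: false });

console.log(liveCanvas.calls.map((c) => c[0]).join(", ")); // background, tree, shadow
console.log(svgTarget.calls.map((c) => c[0]).join(", "));  // background, tree
```

Keeping a single scene routine means the canvas and the export differ only where the flag says they should. In the real sketch, the shared randomSeed(treeShapeSeed) call serves the same purpose for the tree's random branching.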

Redrawing the Canvas and SVG Separately

The key part of this process is in the redrawCanvas() function, which determines whether the elements are drawn on the canvas or the SVG renderer:

```javascript
function redrawCanvas(renderer = this) {
  if (renderer === this) {
    background(135, 206, 235); // For the normal canvas
  } else {
    renderer.background(135, 206, 235); // For the SVG canvas
  }

  let timeValue = timeSlider.value(); // Get the slider value for background time changes
  updateBackground(timeValue, renderer);
  updateSunAndMoon(timeValue, renderer);

  // Draw tree and other elements
  if (renderer === this) {
    // Draw the main tree on the canvas with shadow
    push();
    translate(W / 2, H - 50);
    randomSeed(treeShapeSeed);
    drawTree(0, renderer);
    pop();

    // Draw shadow only on the canvas, cast by whichever light is up
    // (sunX keeps its last value after sunset, so it cannot decide this alone)
    let shadowDirection = timeValue <= 50 ? sunX : moonX;
    let shadowAngle = map(shadowDirection, 0, width, -PI / 4, PI / 4);
    push();
    translate(W / 2, H - 50);
    rotate(shadowAngle);
    scale(0.5, -1.5); // Flip and adjust shadow scale
    randomSeed(treeShapeSeed); // Same seed, so the shadow branches like the tree
    drawTreeShadow(0, renderer);
    pop();
  } else {
    // Draw the main tree in SVG without shadow
    renderer.push();
    renderer.translate(W / 2, H - 50); // Translate for SVG
    randomSeed(treeShapeSeed);
    drawTree(0, renderer);
    renderer.pop();
  }
}
```

- Check the Renderer: The redrawCanvas(renderer = this) function takes a renderer argument, which defaults to this. In p5’s global mode, this is the global object that p5’s drawing functions are attached to, so calls such as renderer.line() draw on the main canvas. When the function is called for SVG rendering, the renderer becomes svgCanvas.

- Background Handling: The background is drawn differently depending on the renderer. For the canvas, the background is rendered as a normal raster graphic (background(135, 206, 235)), but for SVG rendering, it uses renderer.background(), which applies the background color to the vector graphic.

- Tree Rendering: The drawTree() function is called for both canvas and SVG rendering. In SVG mode, however, the shadow is omitted to produce a cleaner vector output. This is handled by a conditional check (if (renderer === this)) that ensures the shadow is only drawn when rendering on the canvas.

- Shadow Omission in SVG: To maintain a clean SVG output, shadows are only drawn in the canvas rendering mode. The drawTreeShadow() function is skipped for the SVG renderer to prevent unnecessary visual clutter in the vector file.

Why the Switch is Necessary

Switching between canvas rendering and SVG rendering is crucial for several reasons:

- Canvas: Provides real-time, interactive feedback as the user adjusts the scene (e.g., changing the time of day via a slider). Shadows and other elements are rendered in real-time to enhance the user experience.

- SVG: This is a high-quality, scalable vector output. SVGs are resolution-independent, so they retain detail regardless of size. However, certain elements like shadows might not translate well to the SVG format, so these are omitted during the SVG rendering process.

This approach allows the project to function interactively on the canvas while still letting users export their creations in a high-quality format at any time.

Full Code

```javascript
// Requires p5.js plus the p5.js-svg library, which provides the SVG renderer
let W = 650;
let H = 450;

let timeSlider;
let saveButton;

let treeShapeSeed = 0; // Fixed seed, so the tree has the same shape on every render

// Not used yet in this version
let showMountains = false;
let showTrees = false;
let mountainShapeSeed = 0;
let mountainLayers = 2;
let treeCount = 8;

// Light positions, updated by updateSunAndMoon()
let sunX;
let sunY;
let moonX;
let moonY;

const Y_AXIS = 1;
let groundLevel = H - 50; // Top of the ground; the drawing code uses H - 50 directly

function setup() {
  createCanvas(W, H);
  background(135, 206, 235);

  timeSlider = createSlider(0, 100, 50);
  timeSlider.position(200, 460);
  timeSlider.size(250);
  timeSlider.input(updateCanvasWithSlider); // Trigger update when the slider moves

  saveButton = createButton('Save as SVG');
  saveButton.position(550, 460);
  saveButton.mousePressed(saveCanvasAsSVG);

  noLoop(); // Only redraw on interaction
  redrawCanvas(); // Initial drawing
}

// Update canvas when the slider changes
function updateCanvasWithSlider() {
  redrawCanvas(); // Call redrawCanvas to apply slider changes
}

// Save the scene as an SVG, without the shadow
function saveCanvasAsSVG() {
  let svgCanvas = createGraphics(W, H, SVG); // SVG renderer from p5.js-svg
  redrawCanvas(svgCanvas); // Redraw everything onto the SVG canvas
  save(svgCanvas, "myLandscape.svg");
  svgCanvas.remove(); // Free the off-screen renderer
}

// Redraw the scene on a specific renderer (main canvas or SVG graphics)
function redrawCanvas(renderer = this) {
  if (renderer === this) {
    background(135, 206, 235); // For the normal canvas
  } else {
    renderer.background(135, 206, 235); // For the SVG canvas
  }

  let timeValue = timeSlider.value(); // Get the slider value for background time changes
  updateBackground(timeValue, renderer);
  updateSunAndMoon(timeValue, renderer);
  drawSimpleGreenGround(renderer);

  // Handle the main tree drawing separately for canvas and SVG
  if (renderer === this) {
    // Draw the main tree on the canvas
    push();
    translate(W / 2, H - 50); // Translate for canvas
    randomSeed(treeShapeSeed);
    drawTree(0, renderer);
    pop();

    // Draw the shadow on the main canvas, cast by whichever light is up
    // (sunX keeps its last value after sunset, so it cannot decide this alone)
    let shadowDirection = timeValue <= 50 ? sunX : moonX;
    let shadowAngle = map(shadowDirection, 0, width, -PI / 4, PI / 4);

    push();
    translate(W / 2, H - 50); // Same translation as the tree
    rotate(shadowAngle); // Rotate based on light direction
    scale(0.5, -1.5); // Scale and flip for shadow effect
    randomSeed(treeShapeSeed); // Same seed, so the shadow branches like the tree
    drawTreeShadow(0, renderer);
    pop();
  } else {
    // Draw the main tree in SVG without shadow
    renderer.push();
    renderer.translate(W / 2, H - 50); // Translate for SVG
    randomSeed(treeShapeSeed);
    drawTree(0, renderer);
    renderer.pop();
  }
}

// Draw the tree's shadow (main canvas only; skipped for SVG export)
function drawTreeShadow(depth, renderer = this) {
  renderer.stroke(0, 0, 0, 80); // Semi-transparent shadow
  renderer.strokeWeight(5 - depth); // Adjust shadow thickness
  branch(depth, renderer); // Reuse the branch function to draw the shadow
}

// Update background colors based on time
function updateBackground(timeValue, renderer = this) {
  let sunriseColor = color(255, 102, 51);
  let sunsetColor = color(30, 144, 255);
  let nightColor = color(25, 25, 112);
  let transitionColor; // Top color of the gradient
  let c2; // Bottom color of the gradient

  if (timeValue > 50) {
    transitionColor = lerpColor(sunsetColor, nightColor, (timeValue - 50) / 50);
    c2 = lerpColor(color(255, 127, 80), nightColor, (timeValue - 50) / 50);
  } else {
    // Divide by 50 so this half ends exactly at sunsetColor, where the night half starts
    transitionColor = lerpColor(sunriseColor, sunsetColor, timeValue / 50);
    c2 = color(255, 127, 80);
  }

  setGradient(0, 0, W, H, transitionColor, c2, Y_AXIS, renderer);
}

// Update sun and moon positions: each crosses the sky along a sine arc
function updateSunAndMoon(timeValue, renderer = this) {
  if (timeValue <= 50) {
    sunX = map(timeValue, 0, 50, -50, width + 50);
    sunY = height * 0.8 - sin(map(sunX, -50, width + 50, 0, PI)) * height * 0.5;
    renderer.noStroke();
    renderer.fill(255, 200, 0);
    renderer.ellipse(sunX, sunY, 70, 70);
  } else {
    moonX = map(timeValue, 50, 100, -50, width + 50);
    moonY = height * 0.8 - sin(map(moonX, -50, width + 50, 0, PI)) * height * 0.5;
    renderer.noStroke();
    renderer.fill(200);
    renderer.ellipse(moonX, moonY, 60, 60);
  }
}

// Create a vertical gradient by drawing one horizontal line per pixel row
function setGradient(x, y, w, h, c1, c2, axis, renderer = this) {
  renderer.noFill();
  if (axis === Y_AXIS) {
    for (let i = y; i <= y + h; i++) {
      let inter = map(i, y, y + h, 0, 1);
      let c = lerpColor(c1, c2, inter);
      renderer.stroke(c);
      renderer.line(x, i, x + w, i);
    }
  }
}

// Draw the green ground at the bottom
function drawSimpleGreenGround(renderer = this) {
  renderer.fill(34, 139, 34);
  renderer.rect(0, H - 50, W, 50);
}

// Draw the main tree
function drawTree(depth, renderer = this) {
  renderer.stroke(139, 69, 19);
  renderer.strokeWeight(3 - depth); // Adjust stroke weight for consistency
  branch(depth, renderer);
}

// Draw tree branches recursively, growing upward from the current origin
function branch(depth, renderer = this) {
  if (depth < 10) {
    renderer.line(0, 0, 0, -H / 15); // Draw one segment
    renderer.translate(0, -H / 15); // Move to its tip
    renderer.rotate(random(-0.05, 0.05)); // Slight random bend

    if (random(1.0) < 0.7) {
      // Split into two children, 0.3 rad to either side, at 80% scale
      renderer.rotate(0.3);
      renderer.scale(0.8);
      renderer.push();
      branch(depth + 1, renderer);
      renderer.pop();
      renderer.rotate(-0.6);
      renderer.push();
      branch(depth + 1, renderer);
      renderer.pop();
    } else {
      // Extend the same branch by another segment without splitting
      branch(depth, renderer);
    }
  } else {
    drawLeaf(renderer);
  }
}

// Draw a cluster of leaves at a branch tip
function drawLeaf(renderer = this) {
  renderer.fill(34, 139, 34);
  renderer.noStroke();
  // random(3, 6) is re-evaluated on each check, so the leaf count varies per tip
  for (let i = 0; i < random(3, 6); i++) {
    renderer.ellipse(random(-10, 10), random(-10, 10), 12, 24); // 12 x 24 leaf at a random offset
  }
}
```

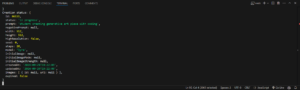

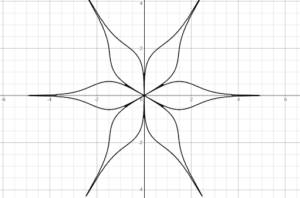

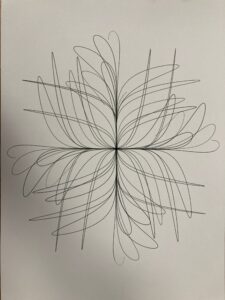

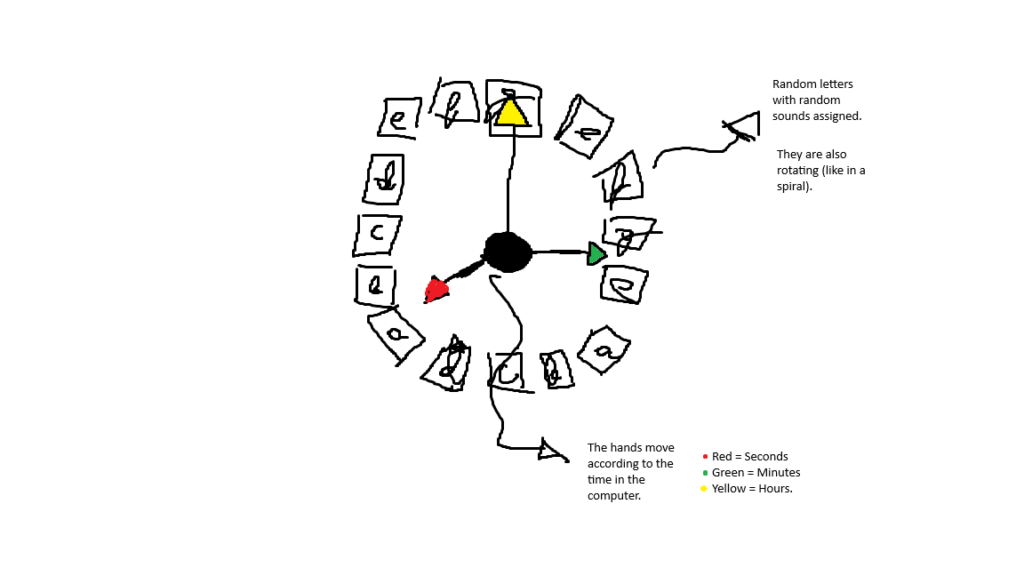


Future Improvements

As the project continues to evolve, several exciting features are planned that will enhance the visual complexity and interactivity of the landscape. These improvements aim to add depth, variety, and richer user engagement, building upon the current foundation.

1. Mountain Layers

A future goal is to introduce mountain layers into the landscape’s background. These mountains will be procedurally generated and layered to create a sense of depth and distance. Users will be able to toggle different layers, making the landscape more immersive. By adding this feature, the project will feel more dynamic, with natural textures and elevation changes in the backdrop.

The challenge will be to ensure these mountain layers integrate smoothly with the existing elements while maintaining a clean, balanced visual aesthetic.

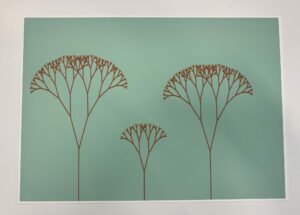
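As a possible starting point for the planned mountain layers, this hypothetical plain-JavaScript sketch generates one ridgeline per layer with 1D midpoint displacement. The ridgelines are seeded, so a layer keeps its shape between redraws (a role mountainShapeSeed could fill). Farther layers sit higher and are smoother, a common way to suggest distance. mulberry32() is a small, widely used seeded PRNG that stands in for p5's random(). None of this is in the current code, and the offsets and roughness values are arbitrary.

```javascript
// mulberry32: a small seeded PRNG returning floats in [0, 1)
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// 1D midpoint displacement: 2^levels + 1 heights, endpoints fixed at baseY
function ridgeline(rand, levels, baseY, roughness) {
  const n = 2 ** levels;
  const ys = new Array(n + 1).fill(baseY);
  let amplitude = roughness;
  for (let step = n; step > 1; step /= 2) {
    const half = step / 2;
    for (let i = half; i < n; i += step) {
      // New point = average of its neighbors plus a random offset
      ys[i] = (ys[i - half] + ys[i + half]) / 2 + (rand() * 2 - 1) * amplitude;
    }
    amplitude /= 2; // Finer detail gets smaller bumps
  }
  return ys;
}

// Hypothetical layer builder: index 0 is the farthest layer (drawn first)
function buildMountainLayers(seed, layerCount, groundY) {
  const rand = mulberry32(seed);
  const layers = [];
  for (let k = 0; k < layerCount; k++) {
    const depth = (layerCount - k) / layerCount; // 1 = farthest
    const baseY = groundY - 60 - 80 * depth; // Farther layers sit higher
    const roughness = 60 * (1 - 0.5 * depth); // ...and are smoother
    layers.push(ridgeline(rand, 6, baseY, roughness));
  }
  return layers;
}

const layers = buildMountainLayers(42, 2, 400); // groundY = H - 50
console.log(layers.length, layers[0].length); // 2 65
```

In p5.js, each array could be drawn as a filled shape with beginShape(), vertex() and endShape(), with vertices spaced W / 64 apart.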

2. Adding Background Trees

In future versions, I plan to implement background trees scattered across the canvas. These trees will vary in size and shape, adding diversity to the forest scene. By incorporating multiple trees of different types, the landscape will feel fuller and more like a natural environment.

The goal is to introduce more organic elements while ensuring that the visual focus remains on the main tree in the center of the canvas.

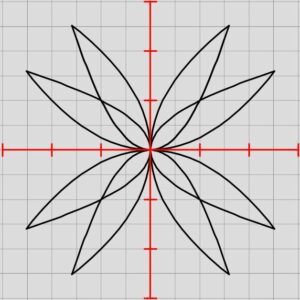
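Here is one hypothetical way to scatter background trees while keeping the main tree in focus. It places treeCount trunks at seeded random x positions, skips a band around the canvas center, and shrinks trees that stand farther back. The sketch returns plain objects instead of drawing, uses mulberry32() as a seeded PRNG, and treats the 120-pixel clearance and the scale range as arbitrary choices.

```javascript
// mulberry32: a small seeded PRNG returning floats in [0, 1)
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical scatter: `count` trees at seeded x positions, clear of the center band
function scatterTrees(seed, count, width, groundY, clearance) {
  const rand = mulberry32(seed);
  const trees = [];
  while (trees.length < count) {
    const x = rand() * width;
    if (Math.abs(x - width / 2) < clearance) continue; // Leave room for the main tree
    const distance = rand(); // 0 = near, 1 = far
    trees.push({
      x,
      y: groundY - 40 * distance, // Farther trees stand a little higher
      scale: 0.6 - 0.3 * distance, // ...and are smaller than the main tree
    });
  }
  // Far-to-near (smaller y first), so nearer trees are drawn over farther ones
  return trees.sort((a, b) => a.y - b.y);
}

const trees = scatterTrees(7, 8, 650, 400, 120); // treeCount = 8, groundY = H - 50
console.log(trees.length); // 8
```

Each entry could then be drawn with push(), translate(x, y), scale(s) and the existing drawTree().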

3. Shifting Tree Shape

Another key feature in development is the tree’s ability to shift shape and direction dynamically in a random pattern. In the future, the tree’s branches will grow differently each time the canvas is refreshed, making each render unique. This will add a level of unpredictability and realism to the scene, allowing the tree to behave more like its real-life counterpart, which never grows the same way twice.

Careful tuning will be required to ensure the tree maintains its natural appearance while introducing variations that feel organic.
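Because treeShapeSeed never changes, the same branching is replayed on every render. One way to get a new tree on each refresh, while keeping the SVG export identical to the screen, is to pick the seed once per session and reuse it for every redraw. The sketch below shows the idea in plain JavaScript. mulberry32() stands in for p5's seeded random(), and pickSessionSeed() and branchBends() are hypothetical helpers. In p5.js, the equivalent would be to assign treeShapeSeed once in setup(), for example from floor(random(1e6)).

```javascript
// mulberry32: a small seeded PRNG returning floats in [0, 1)
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical helper: one unpredictable seed per page load (Math.random is unseeded)
function pickSessionSeed() {
  return Math.floor(Math.random() * 2 ** 32);
}

// Stand-in for the random(-0.05, 0.05) bends inside branch()
function branchBends(seed, count) {
  const rand = mulberry32(seed);
  return Array.from({ length: count }, () => -0.05 + rand() * 0.1);
}

const sessionSeed = pickSessionSeed();
const onScreen = branchBends(sessionSeed, 5);
const exported = branchBends(sessionSeed, 5); // Same seed: the SVG matches the screen
const nextVisit = branchBends(sessionSeed + 1, 5); // New seed: a different tree

console.log(onScreen.join() === exported.join()); // true
```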

4. Enhanced Interactivity

I also aim to expand the project’s interactive elements. Beyond the current time slider, future improvements will allow users to manipulate other aspects of the landscape, such as the number of trees, the height of the mountains, or even the size and shape of the main tree. This will allow users to have a greater impact on the generative art they create, deepening their connection with the landscape.

Sources:

https://p5js.org/reference/p5/createGraphics/

https://github.com/zenozeng/p5.js-svg

https://www.w3.org/Graphics/SVG/

https://github.com/processing/p5.js/wiki/

Coding Challenge #14: Fractal Trees – Recursive

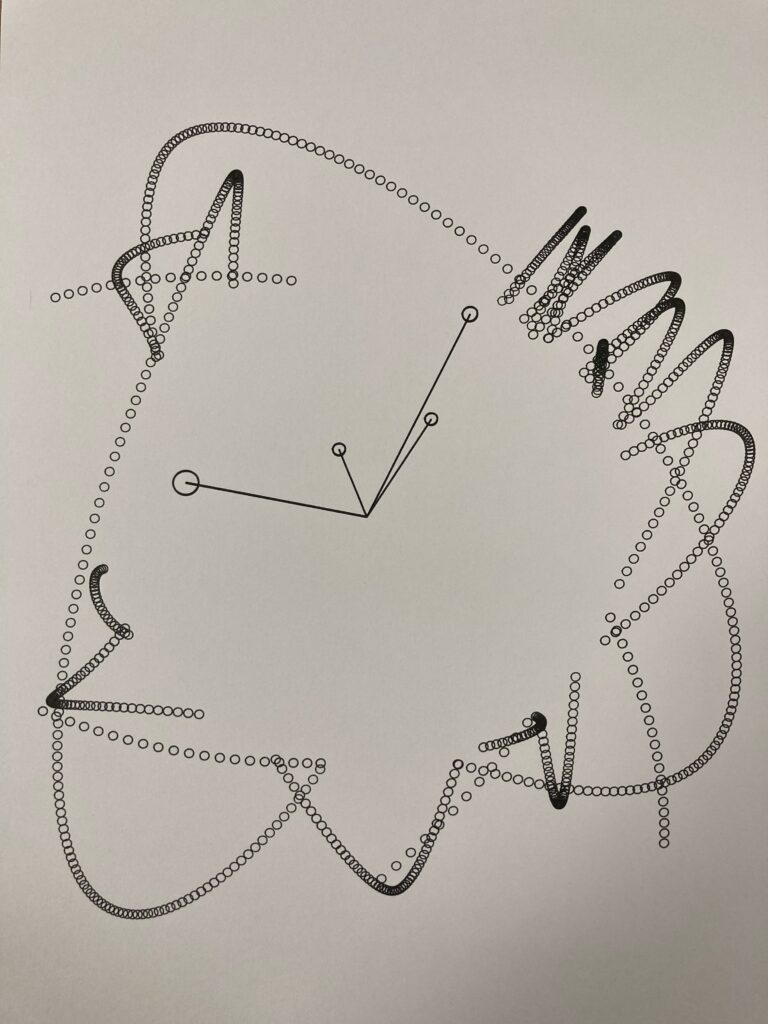

Second Version:


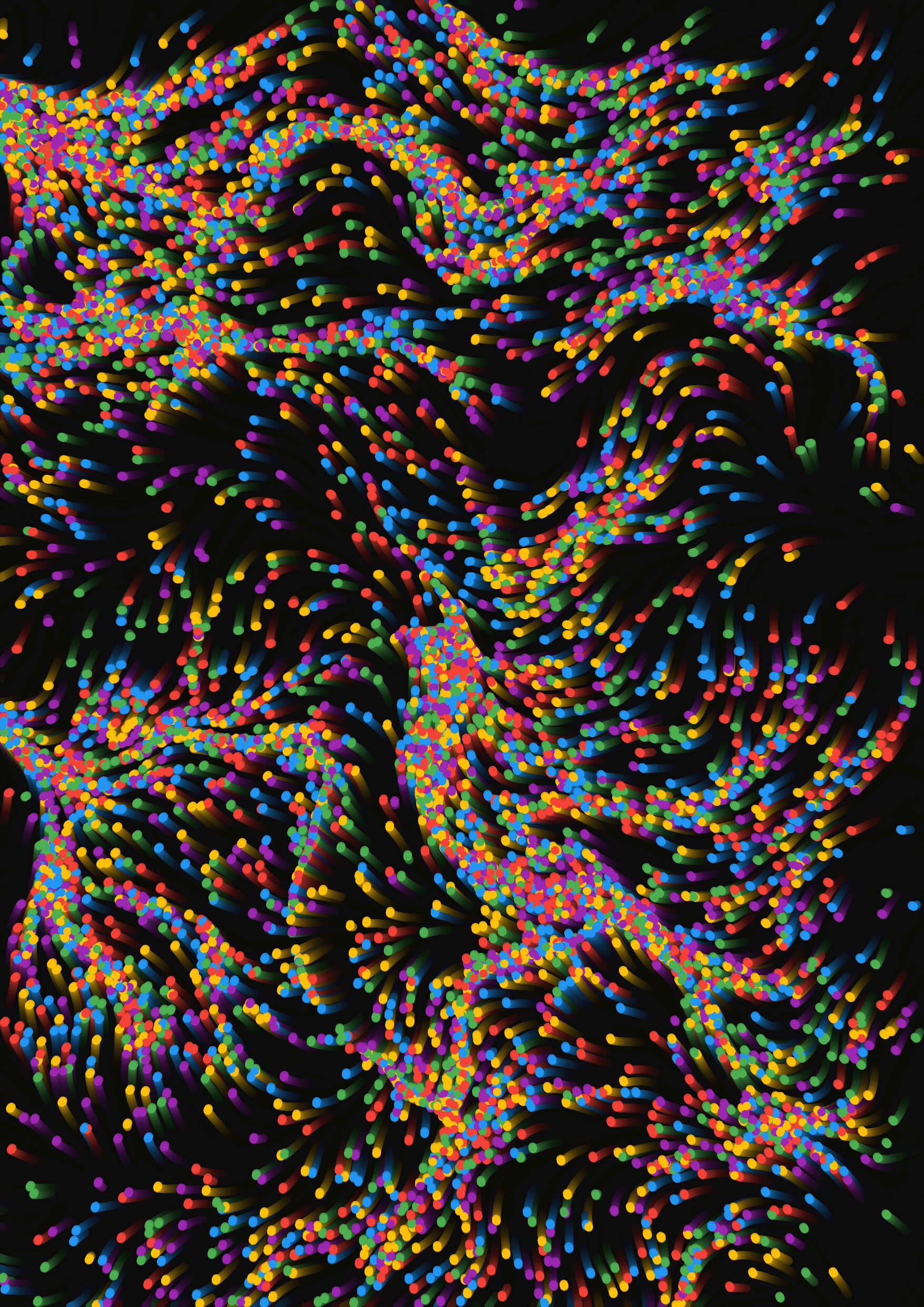

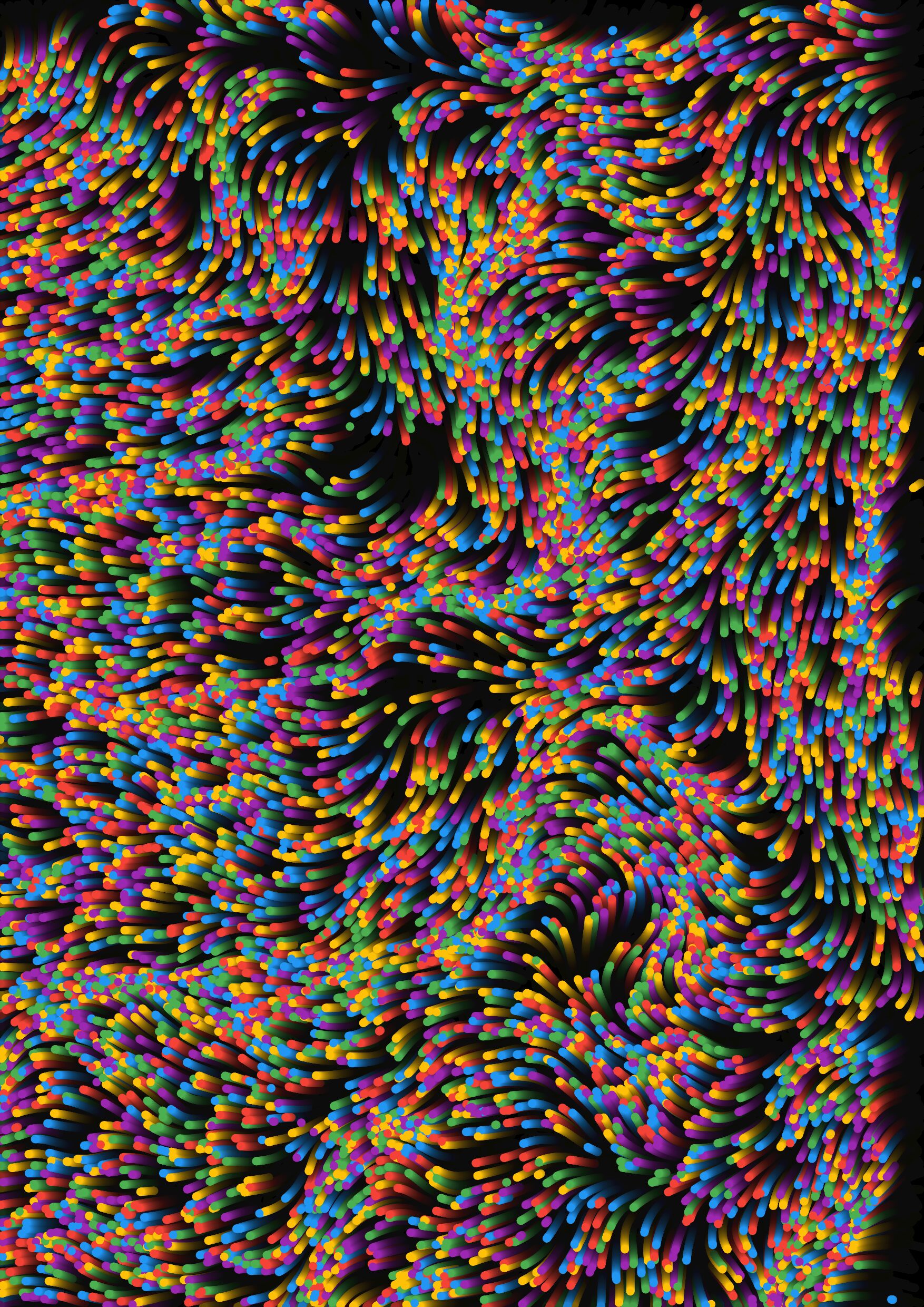

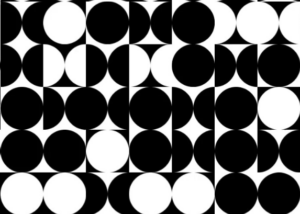

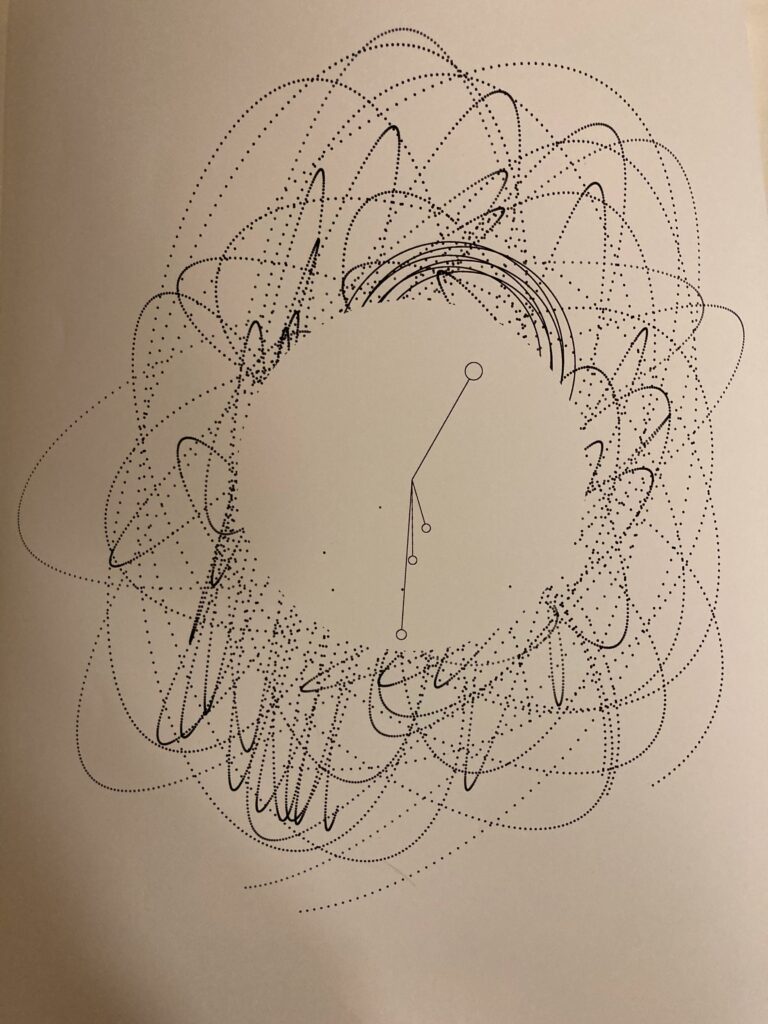

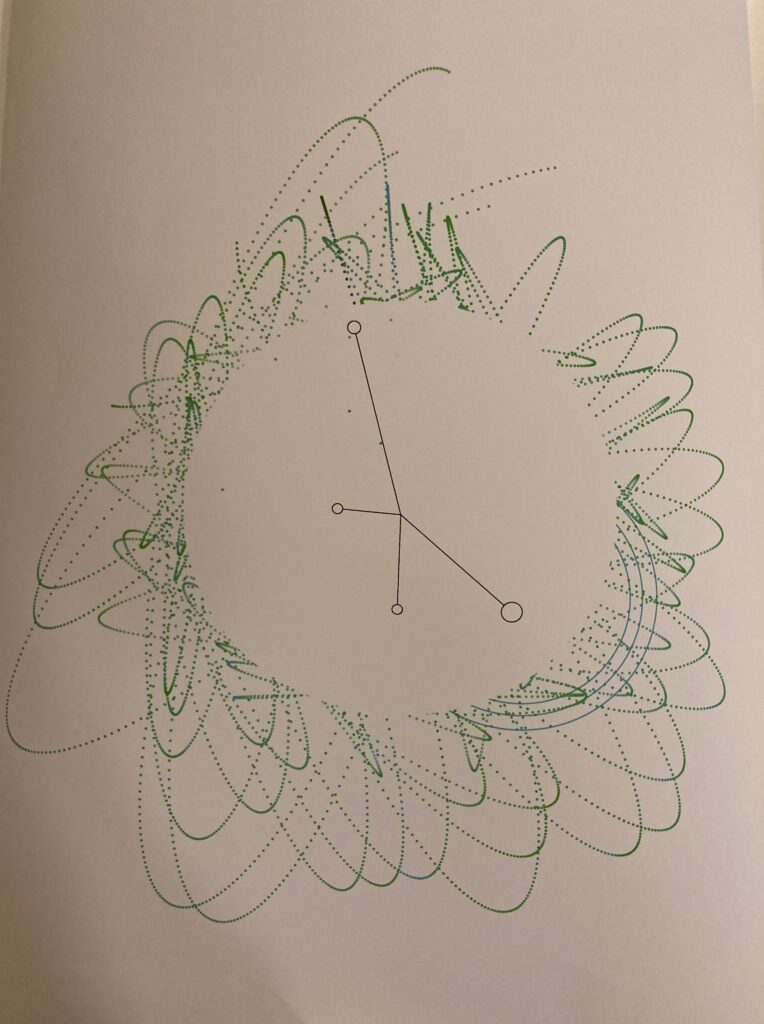

The Akan people, which include the Ashanti (or Asante), have a rich cultural heritage that emphasizes the importance of history and self-identity. The word “Sankofa” translates to “to retrieve,” embodying the proverb, “Se wo were fi na wosankofa a yenkyi,” meaning “it is not taboo to go back and get what you forgot.” This principle highlights that understanding our history is crucial for personal growth and cultural awareness.

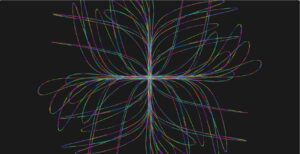

This philosophy has greatly inspired my project. Adinkra symbols, with their deep historical roots and intricate patterns, serve as a central element of my work. These symbols carry meanings that far surpass my personal experiences, urging me to look back at my heritage.

I aim to recreate these age-old symbols in a modern, interactive format that pays homage to their origins. It’s my way of going back into the past to get what is good and moving forward with it.