Concept

In my final project, I delve into the mysterious realm of hypnagogic and hypnopompic hallucinations – vivid, dream-like experiences that occur during the transition between wakefulness and sleep. These phenomena, emerging spontaneously from the brain without external stimuli, have long fascinated me. They raise profound questions about the brain’s capacity to generate alternate states of consciousness filled with surreal visuals.

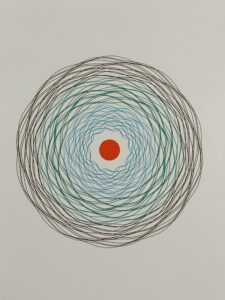

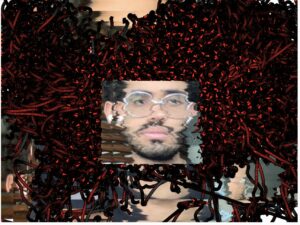

My interest in these hallucinations, particularly their elusive nature and the lack of a complete understanding of their causes, inspired me to create an interactive art piece. I drew inspiration from Casey REAS, an artist known for his generative artworks. Below are some examples from his work “Untitled Film Stills, Series 5”, which showcases facial distortions and dream-like states.


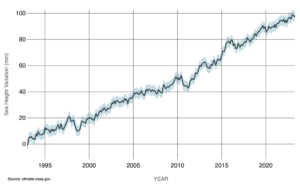

Experts are still exploring what exactly triggers these hallucinations. As noted by the Sleep Foundation, “Visual hypnagogic hallucinations often involve moving shapes, colors, and images, similar to looking into a kaleidoscope,” an effect I aimed to replicate with the dynamic movement of boids in my project. Auditory hallucinations, as described by Verywell Health, typically involve background noises, which I represent with a sound that corresponds to each intensity level.

In conceptualizing and creating this project, I embraced certain qualities that may not conventionally align with typical aesthetic standards. The final outcome of the project might not appear as the most visually appealing or aesthetically organized work. However, this mirrors the inherent nature of hallucinations – they are often messy, unorganized, and disconnected. Hallucinations, especially those experienced at the edges of sleep, can be chaotic and disjointed, reflecting a mind that is transitioning between states of consciousness.

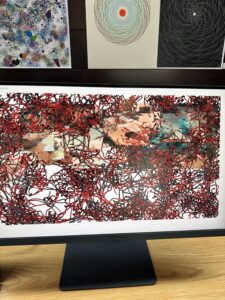

Images and User Testing

IM Showcase Documentation

Implementation Details and Code Snippets

At the onset, users are prompted to choose an intensity level, which dictates the pace and density of the visual elements that follow. Once the webcam is active, ml5.js detects the user’s face and the program isolates it within a distinct square region. This face area is then subjected to pixel manipulation, achieving a melting effect that symbolizes the distortion characteristic of hallucinations.
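As a rough illustration of how the square around the face can be obtained, the sketch below pulls the bounding box out of a single detection result and clamps it to the canvas. It assumes the result shape used in ml5's FaceApi examples, where each detection exposes `alignedRect._box` with `_x`, `_y`, `_width`, and `_height`. The helper name `faceBox` and the mocked detection are hypothetical and not taken from the project.

```javascript
// Hypothetical helper: extracts a canvas-safe bounding box from one detection.
// Assumes detection.alignedRect._box holds _x, _y, _width and _height.
function faceBox(detection, canvasWidth, canvasHeight) {
  const box = detection.alignedRect._box;
  // Clamp every edge to the canvas so later pixel reads stay in bounds.
  const left = Math.min(canvasWidth, Math.max(0, Math.floor(box._x)));
  const top = Math.min(canvasHeight, Math.max(0, Math.floor(box._y)));
  const right = Math.min(canvasWidth, Math.floor(box._x + box._width));
  const bottom = Math.min(canvasHeight, Math.floor(box._y + box._height));
  return {
    x: left,
    y: top,
    w: Math.max(0, right - left),
    h: Math.max(0, bottom - top),
  };
}

// Usage with a mocked detection (illustrative values only).
const mockDetection = {
  alignedRect: { _box: { _x: -12.4, _y: 30.7, _width: 200.2, _height: 180.9 } },
};
console.log(faceBox(mockDetection, 640, 480));
```

Clamping matters here because a face near the edge of the frame can produce a box that extends past the canvas, and the distortion code reads pixels from exactly that region.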

Key features of the implementation include:

- Face Detection with ml5.js: Utilizing ml5.js’s FaceAPI, the sketch identifies the user’s face in real-time through the webcam.

      // Initialize FaceApi with the video element, options, and a callback function
      faceapi = ml5.faceApi(video, options, modelReady);

      function modelReady() {
        faceapi.detect(gotFaces); // start the face detection process
      }

      function gotFaces(error, results) {
        if (error) {
          console.error(error);
          return;
        }
        detections = results; // store the face detection results
        faceapi.detect(gotFaces); // call detect again to keep detection continuous
      }

- Distortion Effect:

- Pixelation Effect: The region of the user’s face, identified by the face detection coordinates, undergoes a pixelation process. Here, I average the colors of pixels within small blocks (about 10×10 pixels each) and then recolor these blocks with the average color. This technique results in a pixelated appearance, making the facial features more abstract.

- Melting Effect: To enhance the hallucinatory experience, I applied a melting effect to the pixels within the face area. This effect is achieved by displacing each horizontal row of pixels by a different, continuously changing offset. I use Perlin noise to drive the offsets, which creates an organic, fluid motion and makes the distortion seem natural and less uniform.

      // In draw(): redraw the face area detected by the ml5.js FaceApi
      image(video.get(_x, _y, _width, _height), _x, _y, _width, _height);

      // Grab the face area for the pixelation and melting effects
      let face = get(_x, _y, _width, _height);
      face.loadPixels();

      // Pixelation: step through the face area in blocks and recolor each block
      // with the color sampled at its top-left pixel
      const blockSize = 10; // about 10x10 pixels per block
      for (let y = 0; y < face.height; y += blockSize) {
        for (let x = 0; x < face.width; x += blockSize) {
          let i = (x + y * face.width) * 4;
          let r = face.pixels[i + 0];
          let g = face.pixels[i + 1];
          let b = face.pixels[i + 2];
          fill(r, g, b);
          noStroke();
          rect(_x + x, _y + y, blockSize, blockSize); // draw the block so the fill is visible
        }
      }

      // Melting: shift each horizontal row of pixels by an offset determined by Perlin noise
      for (let y = 0; y < face.height; y++) {
        let offset = floor(noise(y * 0.1, millis() * 0.005) * 50);
        copy(face, 0, y, face.width, 1, _x + offset, _y + y, face.width, 1);
      }
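The pixelation loop above reads a single pixel per block. A version that averages every pixel in a block, as the description suggests, might look like the following sketch. It works on a plain RGBA array laid out like p5's `pixels[]` (four bytes per pixel, row by row) and assumes a pixel density of 1. The function name `pixelate` is hypothetical.

```javascript
// Hypothetical helper: returns a copy of an RGBA pixel array in which every
// blockSize x blockSize tile is filled with the average color of that tile.
function pixelate(pixels, width, height, blockSize) {
  const out = new Uint8ClampedArray(pixels); // copy, so alpha values are kept
  for (let by = 0; by < height; by += blockSize) {
    for (let bx = 0; bx < width; bx += blockSize) {
      // Tiles on the right and bottom edges may be smaller than blockSize.
      const yEnd = Math.min(by + blockSize, height);
      const xEnd = Math.min(bx + blockSize, width);

      // First pass: sum the color channels inside the tile.
      let r = 0, g = 0, b = 0, count = 0;
      for (let y = by; y < yEnd; y++) {
        for (let x = bx; x < xEnd; x++) {
          const i = (x + y * width) * 4;
          r += pixels[i];
          g += pixels[i + 1];
          b += pixels[i + 2];
          count++;
        }
      }
      r = Math.round(r / count);
      g = Math.round(g / count);
      b = Math.round(b / count);

      // Second pass: write the average back to every pixel in the tile.
      for (let y = by; y < yEnd; y++) {
        for (let x = bx; x < xEnd; x++) {
          const i = (x + y * width) * 4;
          out[i] = r;
          out[i + 1] = g;
          out[i + 2] = b;
        }
      }
    }
  }
  return out;
}

// Usage on a tiny 2x2 image (illustrative values only).
const tinyImage = new Uint8ClampedArray([
  0, 10, 8, 255,   100, 20, 8, 255,
  200, 30, 8, 255, 100, 40, 8, 255,
]);
console.log(Array.from(pixelate(tinyImage, 2, 2, 2)));
```

Averaging costs one extra pass over the face area per frame, so sampling a single pixel per block remains a reasonable shortcut when performance is tight.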

- Boid and Flock Classes: The core of the dynamic flocking system lies in the creation and management of boid objects. Each boid is an autonomous agent which exhibits behaviors like separation, alignment, and cohesion.
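The three flocking rules can be sketched without any p5.js dependency. The version below is a generic Reynolds-style formulation using plain `{x, y}` objects, not the project's actual Boid class. The function name `flockingForces` and the inverse-distance weighting for separation are assumptions made for illustration.

```javascript
// Hypothetical helper: computes the three steering vectors for one boid.
// Each boid is assumed to look like { position: {x, y}, velocity: {x, y} }.
function flockingForces(boid, others, perceptionRadius) {
  const separation = { x: 0, y: 0 };
  const alignment = { x: 0, y: 0 };
  const cohesion = { x: 0, y: 0 };
  let count = 0;

  for (const other of others) {
    if (other === boid) continue;
    const dx = boid.position.x - other.position.x;
    const dy = boid.position.y - other.position.y;
    const d = Math.hypot(dx, dy);
    if (d === 0 || d > perceptionRadius) continue; // ignore boids out of range

    // Separation: push away from neighbors, more strongly when they are close.
    separation.x += dx / (d * d);
    separation.y += dy / (d * d);
    // Alignment and cohesion: accumulate neighbor velocity and position.
    alignment.x += other.velocity.x;
    alignment.y += other.velocity.y;
    cohesion.x += other.position.x;
    cohesion.y += other.position.y;
    count++;
  }

  if (count > 0) {
    // Alignment: steer toward the average heading of the neighbors.
    alignment.x = alignment.x / count - boid.velocity.x;
    alignment.y = alignment.y / count - boid.velocity.y;
    // Cohesion: steer toward the average position of the neighbors.
    cohesion.x = cohesion.x / count - boid.position.x;
    cohesion.y = cohesion.y / count - boid.position.y;
  }
  return { separation, alignment, cohesion };
}

// Usage with two nearby boids and one that is out of range.
const me = { position: { x: 0, y: 0 }, velocity: { x: 1, y: 0 } };
const near = { position: { x: 10, y: 0 }, velocity: { x: 0, y: 1 } };
const far = { position: { x: 200, y: 0 }, velocity: { x: 5, y: 5 } };
console.log(flockingForces(me, [me, near, far], 75));
```

In a full flock, each of the three vectors would typically be limited to a maximum force and weighted before being added to the boid's acceleration.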

In selecting the shape and movement of the boids, I chose a triangular form pointing in the direction of their movement. I made this design choice to evoke the unsettling feeling of a worm infestation, contributing to the overall creepy and surreal atmosphere of the project.

    show(col) {
      let angle = this.velocity.heading(); // point in the direction of motion
      fill(col);
      stroke(0);
      push();
      translate(this.position.x, this.position.y);
      rotate(angle);
      beginShape(); // draw the triangle
      vertex(this.r * 2, 0);
      vertex(-this.r * 2, -this.r);
      vertex(-this.r * 2, this.r);
      endShape(CLOSE);
      pop();
    }

- Intensity Levels: By adjusting parameters like velocity, force magnitudes, and the number of boids created, I varied the dynamics and sound to suit each intensity level of the hallucination simulation. The code below shows the Medium settings, for instance.

      let btnMed = createButton('Medium');
      btnMed.mousePressed(() => {
        maxBoids = 150; // change the number of boids
        // remove all buttons so the user selects only one level
        btnLow.remove();
        btnMed.remove();
        btnHigh.remove();
        initializeFlock(); // start the flock system only after the user selects a level
        soundMedium.loop(); // play the sound for this level
      });

      // In the Boid class; done for each intensity
      behaviorIntensity() {
        if (maxBoids === 150) { // Medium intensity
          this.maxspeed = 3;
          this.maxforce = 0.04;
          this.perceptionRadius = 75;
        }
        // the other intensity levels are omitted here
      }

- Tracing: The boids, as well as the user’s distorted face, leave a trace behind them as they move. This creates a haunting visual effect which contributes to the disturbing nature of the hallucinations. This effect is achieved by not calling a traditional background function in the sketch, so the previous frame’s drawings are never cleared and a persistent visual trail builds up, adding to the hallucinatory quality of the piece. I experimented with alternative methods to create a trailing effect, but found that slight, fading trails did not deliver the intense, lingering impact I sought. The decision to forgo a full webcam feed was crucial in preserving this effect.

- Integrating Sound: For each intensity level, I integrated a different auditory effect. These sounds play a crucial role in immersing the user in the experience, with each level featuring a sound that complements its visual elements.

- User Interactivity: The user’s position relative to the screen (left or right) changes the color of the boids, directly involving the user in the creation process. The intensity level selection further personalizes the experience, representing the varying nature of hallucinations among individuals.

      if (_x + _width / 2 < width / 2) {
        // Face on the left half
        flockColor = color(255, 0, 0); // red flock
      } else {
        // Face on the right half
        flockColor = color(100, 100, 100); // gray flock
      }
      // Run the flock with the determined color
      flock.run(flockColor);

The embedded sketch doesn’t work here, but here’s a link to the sketch.
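The per-level settings described in the Intensity Levels item could also be gathered into one lookup table rather than inferred from the boid count. In the sketch below, only the Medium values come from the code shown earlier. The Low and High values, the `INTENSITY_PRESETS` name, and the `presetFor` helper are placeholder assumptions.

```javascript
// Hypothetical preset table. Only the "medium" row reflects the project code;
// the "low" and "high" rows are placeholder values chosen for illustration.
const INTENSITY_PRESETS = {
  low: { maxBoids: 50, maxSpeed: 2, maxForce: 0.03, perceptionRadius: 50 }, // assumed
  medium: { maxBoids: 150, maxSpeed: 3, maxForce: 0.04, perceptionRadius: 75 }, // from the post
  high: { maxBoids: 300, maxSpeed: 4, maxForce: 0.05, perceptionRadius: 100 }, // assumed
};

// Returns a copy of the preset for a level, or throws on an unknown level.
function presetFor(level) {
  if (!Object.prototype.hasOwnProperty.call(INTENSITY_PRESETS, level)) {
    throw new Error(`Unknown intensity level: ${level}`);
  }
  return { ...INTENSITY_PRESETS[level] };
}

// Usage: a button handler could look up the preset once and pass it on.
const chosen = presetFor("medium");
console.log(chosen.maxBoids, chosen.maxSpeed, chosen.maxForce, chosen.perceptionRadius);
```

Keeping the values in one place means a boid can read its speed, force, and perception radius directly from the chosen preset instead of checking the value of `maxBoids`.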

Challenges Faced

- Integrating ml5.js for Face Detection: I initially faced numerous errors in implementing face detection using the ml5.js library.

- Pixel Manipulation for Facial Distortion: Having no prior background in pixel manipulation, this aspect of the project was both challenging and fun.

- Optimization Issues:

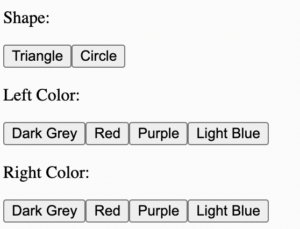

- In earlier iterations, I aimed to allow users to control the colors and shapes of the boids. However, this significantly impacted the sketch’s performance, resulting in a heavy lag.

I tried various approaches to mitigate this:

- Reducing Color Options: Initially, I reduced the range of colors available for selection, hoping that fewer options would ease the load. The sketch was still not performing optimally.

- Limiting User Control: I then experimented with allowing users to choose either the color or the shape of the boids, but not both. However, this didn’t help either.

- Decreasing the Number of Boids: I also experimented with reducing the number of boids. However, this approach had a downside; fewer boids meant less dynamic and complex flocking behavior. The interaction between boids influences their movement, and reducing their numbers took away from the visuals.

- I then decided to shift the focus from user-controlled aesthetics to user-selected intensity levels. This change allowed for a dynamic and engaging experience without lagging.

Aspects I’m Proud Of

- Successfully integrating ml5’s face detection elements

- Being able to distort the face by manipulating pixels

- Being able to change the colors of the boids by the user’s position on the screen

- Introducing interactivity by creating buttons that customize the user’s experience

- Remaining flexible and thinking of alternative solutions when running into issues

Future Improvements

Looking ahead, I aim to revisit the idea of user-selected colors and shapes for the boids. I believe that with further optimization and refinement, this feature could greatly enhance the interactivity and visual appeal of the project, making it an even more immersive experience.

I also plan to add a starter screen which will provide users with an introduction to the project, offering instructions and a description of what to expect. This would make the project more user-friendly. Due to time constraints, this feature couldn’t be included.

References

Hypnagogic Hallucinations: Unveiling the Mystery of Waking Dreams

https://www.verywellhealth.com/what-causes-sleep-related-hallucinations-3014744