Inspiration:

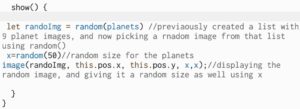

I have always been interested in programs that can locate specific areas of the body or face from a camera image. Although it is a delicate process that takes many trials and sample pictures to get right, it results in a very exciting and interactive experience.

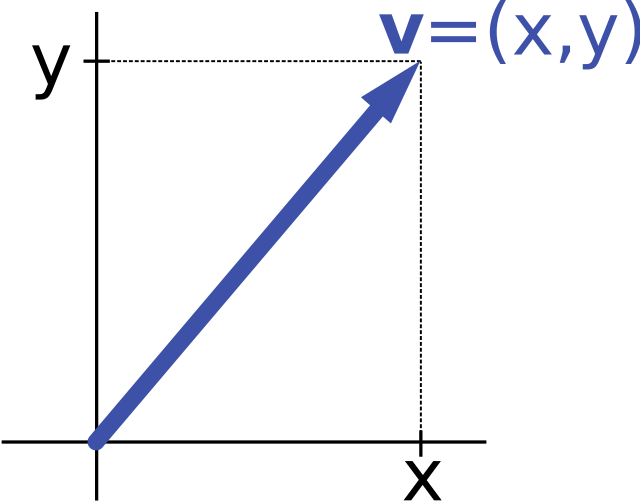

Although this concept is really interesting, what fascinated me even more is how much we can do with these facial markers. I was especially drawn to the ability to track vectors that mimic facial movements and to draw those vectors over the camera feed. As I thought about the changes in displacement and in the x and y values of each vector, I wondered how to visualize that change, and that is when I thought of a simple graph used in physics and maths all the time: a Position vs. Time graph!

Concept:

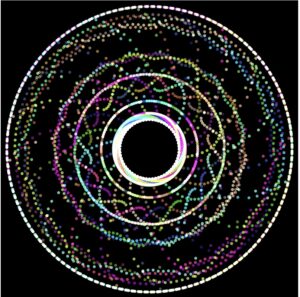

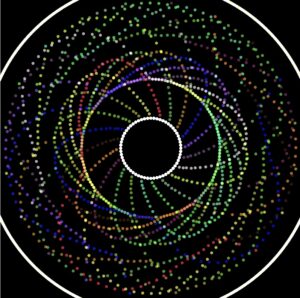

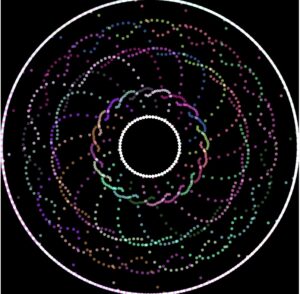

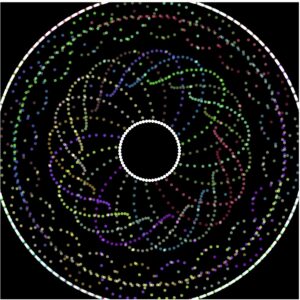

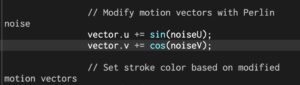

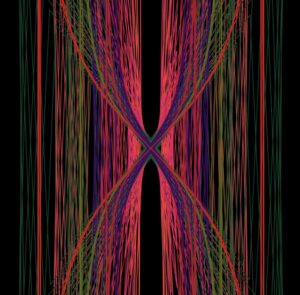

My program uses the p5.js library to capture video input, analyze optical flow, and visualize motion with vectors, focusing on the vertical and horizontal changes in those vectors. The flow file handles motion detection and optical flow, which are concepts beyond the scope of the class, so I adapted implementations I found online and integrated them into the flow.js file.

The flow values it returns are then used to draw motion vectors on the canvas, representing the vertical and horizontal motion detected. In addition, the program creates two instances of the graph class to visualize the left-right (top graph) and up-down (bottom graph) motion over time, producing trailing graphs that show the history of the motion. Throughout the draw loop, the motion vectors are also modified with Perlin noise, to introduce randomness, and with trigonometry.
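The trailing graphs can be pictured as a fixed-length history of recent motion values. Below is a minimal sketch of that idea in plain JavaScript. The class name `MotionHistory`, its methods, and the `maxAbs` scaling parameter are all hypothetical and chosen for illustration; this is not the graph class from the project.

```javascript
// Hypothetical stand-in for a trailing-graph class: keeps the last
// `maxLength` motion values and converts them to canvas coordinates.
class MotionHistory {
  constructor(maxLength) {
    this.maxLength = maxLength; // assumed to be at least 2
    this.values = [];
  }

  // Add the newest motion value and drop the oldest once the buffer is full.
  push(value) {
    this.values.push(value);
    if (this.values.length > this.maxLength) {
      this.values.shift();
    }
  }

  // Convert the history to points inside a width x height graph area.
  // A value of 0 sits on the vertical midline; +maxAbs maps to the top edge.
  toPoints(width, height, maxAbs) {
    const step = width / (this.maxLength - 1);
    return this.values.map((value, i) => {
      const clamped = Math.max(-maxAbs, Math.min(maxAbs, value));
      return {
        x: i * step,
        y: height / 2 - (clamped / maxAbs) * (height / 2),
      };
    });
  }
}
```

In a p5.js sketch, one instance could track the horizontal component and another the vertical component, with each frame calling `push()` once and then connecting the points from `toPoints()` with `line()` or `vertex()`.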

How it Started:

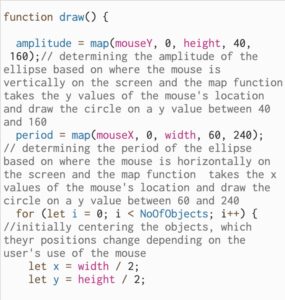

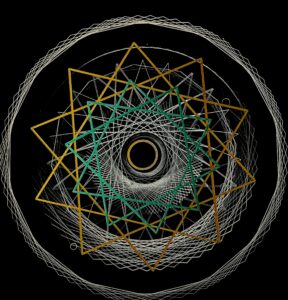

Initially I had a completely different idea in mind. I planned to combine several of the quick sketches I had implemented into a program representing how words and actions can make a difference. My initial sketches are shown below:

When I weighed the pros and cons of integrating all these sketches into one, I found that the cons outweighed the pros. The main advantage of this idea was that it used many of the concepts we covered in class: vectors, fractals, steering forces, Perlin noise, particle systems, and more.

However, the biggest downside was that, although it ticked the boxes for integrating the material taught in class, it lacked visual appeal. On top of that, p5.js tends to lag when too many things run at once, especially when user interaction is involved.

I started from the flow field code demonstration we went over in class, which used Perlin noise. I then added the webcam as my user interaction method. While working on displaying the vectors, I went back to the Perlin noise integration and applied it to the vectors to ensure a smoother transition when the vectors' x and y components change.

Final Project: Stages

Stage 1: Camera Input

https://editor.p5js.org/ea2749/full/W9QWMntNK

Stage 2: Integrating flow field and Perlin Noise

https://editor.p5js.org/ea2749/full/aTHHs17Mj

Stage 3: RGB dependencies on vector’s x and y

https://editor.p5js.org/ea2749/full/hrdedXJrD

Stage 4: Graphical Representation & Final Touches

https://editor.p5js.org/ea2749/full/2-pGvXr8o

Tackling Challenges:

The main challenge I faced was understanding the user interaction methods I wanted to use. Since I wanted to explore outside my comfort zone, I had to try new types of user interaction besides the mouse and keyboard, so I decided to use the webcam.

While using the camera, I found ways online to get these facial points. However, it was difficult to translate the values returned by those methods and programs into arguments I could use the way we used vectors in class. User interaction methods like these do not always return values or types that work with any method or class, so I got there by modifying and simplifying the code, using ChatGPT, and a lot of trial and error.

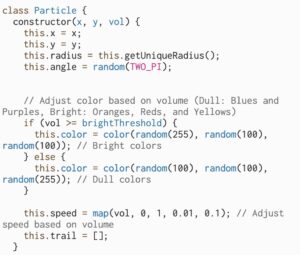

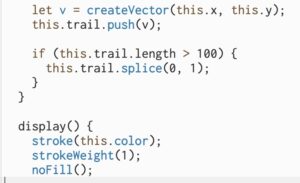

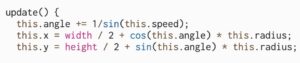

Snippets of Code:

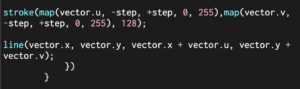

Displaying Vectors and Using them as Arguments:

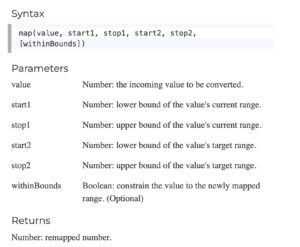

Vectors are represented by lines, with the x and y values of each vector used as arguments for the line. For the color, we use the map function to take the horizontal or vertical component of the vector and translate it to a value from 0 to 255, which we can then use as an RGB value. Notice that the colors only change if the vector's horizontal or vertical component changes, meaning the colors change only when movement is detected.
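The mapping from vector components to color can be sketched roughly as follows. This is an illustration rather than the project's code: `remap` reproduces the linear formula behind p5.js's built-in `map()` so the example runs without the library, while `flowToColor`, the `maxFlow` bound, and the fixed blue value are assumptions made for the example.

```javascript
// Same linear remapping that p5.js's map() performs (without clamping).
function remap(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Hypothetical helper: turn a flow vector (u, v) into an [r, g, b] triple.
// maxFlow is an assumed upper bound on the size of each component.
function flowToColor(u, v, maxFlow = 10) {
  const toChannel = (component) => {
    const scaled = remap(component, -maxFlow, maxFlow, 0, 255);
    // Clamp so unusually large motion still gives a valid color channel.
    return Math.round(Math.min(255, Math.max(0, scaled)));
  };
  const r = toChannel(u); // horizontal motion drives red
  const g = toChannel(v); // vertical motion drives green
  const b = 128;          // fixed blue value, chosen arbitrarily here
  return [r, g, b];
}
```

Inside a p5.js draw loop this could be used as `stroke(...flowToColor(u, v)); line(x, y, x + u, y + v);`. With no motion, both components are 0 and the color stays at the same mid-range value, which matches the observation that colors only change when movement is detected.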

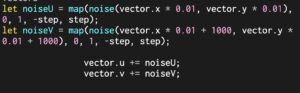

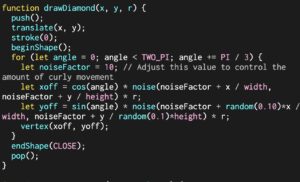

Using Perlin Noise:

I use Perlin noise to achieve a smoother transition as the vectors move. The map function first scales the vector values down to a smaller range, so that the inputs to the noise function change gradually and its output stays seamless and smooth.
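A rough sketch of this step is shown below. To keep the example runnable outside p5.js, it uses a simple 1D value noise function as a stand-in; this is not true Perlin noise and not p5.js's `noise()`, but it shares the property that matters here, namely smooth output in the range 0 to 1. The names `valueNoise1D` and `noisyComponent`, and the `maxFlow` and `strength` parameters, are assumptions for illustration.

```javascript
// Stand-in for p5.js's noise(): 1D value noise, NOT true Perlin noise.
// Returns a value in [0, 1) that varies smoothly with x.
function valueNoise1D(x) {
  const lattice = (i) => {
    const s = Math.sin(i * 127.1) * 43758.5453;
    return s - Math.floor(s); // deterministic pseudo-random value in [0, 1)
  };
  const i = Math.floor(x);
  const f = x - i;
  const t = f * f * (3 - 2 * f); // smoothstep easing between lattice points
  return lattice(i) * (1 - t) + lattice(i + 1) * t;
}

// Hypothetical helper: nudge one vector component with smooth noise.
// The component is first scaled into a small 0..1 range so that nearby
// component values sample nearby (and therefore similar) noise values.
function noisyComponent(component, maxFlow = 10, strength = 2, noiseFn = valueNoise1D) {
  const scaled = (component + maxFlow) / (2 * maxFlow);
  const offset = (noiseFn(scaled) - 0.5) * strength; // centered around 0
  return component + offset;
}
```

In a p5.js sketch, the built-in `noise` could be passed as `noiseFn` and `map()` could replace the manual scaling. The point of scaling down is that small changes in the input produce small changes in the noise output, so the vectors drift rather than jump.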

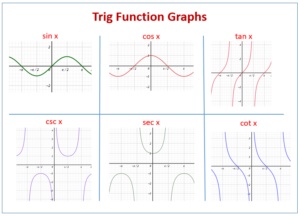

Using Trigonometry:

I use trigonometry, specifically sine and cosine, to keep the increments of the vector's vertical and horizontal components within [-1, 1]. This bounds each step even further, making the transitions smoother and more seamless.
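One way to picture this is to convert a noise value into an angle and take its cosine and sine as the per-frame increments, since both functions always return values in [-1, 1]. The sketch below assumes the input `n` is a noise value between 0 and 1; the function name `stepFromNoise` is hypothetical, and the project's actual formula may differ.

```javascript
// Hypothetical helper: turn a noise value n (assumed to be in 0..1) into
// bounded increments for a vector's horizontal and vertical components.
function stepFromNoise(n) {
  const angle = n * 2 * Math.PI; // spread the noise value over a full circle
  return {
    dx: Math.cos(angle), // always within [-1, 1]
    dy: Math.sin(angle), // always within [-1, 1]
  };
}

// Example: apply one bounded step to a vector with components (u, v).
function applyStep(u, v, n) {
  const { dx, dy } = stepFromNoise(n);
  return { u: u + dx, v: v + dy };
}
```

Because the step always lies on the unit circle, no single frame can move a component by more than 1, however large the underlying motion value is.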

Final Product:

https://editor.p5js.org/ea2749/full/2-pGvXr8o

Reflection and Areas of Improvement:

To conclude this project and this semester: I really enjoyed developing it, mostly because I learned so much about user interaction and was able to integrate concepts taught in class, such as vectors, Perlin noise, and trigonometry.

To take this project further, I plan to find other ways to make it more interactive, perhaps by increasing its sensitivity to motion or by having the vectors react differently depending on the extent of the motion. I also think the project could have been better if I had used objects from the physics libraries instead of representing the vectors as lines.

The IM Showcase:
