https://editor.p5js.org/oae233/sketches/0fc6TGzO4

Concept / Idea

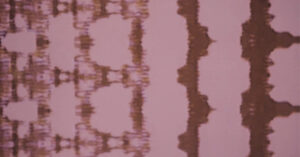

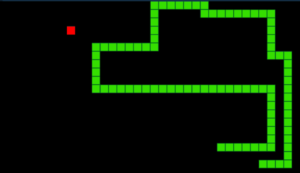

I had already done bird flocking for a previous assignment, so this time I wanted to do something slightly different while still capturing the essence of watching flocking behaviour in real life. I wanted to recreate the vibe of an underwater camera filming a school of fish, and the way the fish reflect light as they move through the water.

Implementation:

I wanted the fish to be 3D, so I used a for loop that draws a row of boxes one after another, with their sizes varying along a sine wave. I then used another sine wave to move these boxes from side to side, and a third with a longer wavelength for up-and-down movement. Together, these produce the swimming motion of the fish.
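To make the wave maths easier to see in isolation, here is a minimal p5-free sketch of the same calculations used in the render() code highlighted below. It is an illustration, not the sketch's actual code: `mapRange` is a hypothetical stand-in for p5's `map()`, `fishSegments` is an illustrative name, and unlike the original loop it holds the time `t` (the sketch's `this.z`) fixed for the whole body instead of advancing it per segment.

```javascript
// Hypothetical stand-in for p5's map(): linearly remaps v from [a1, b1] to [a2, b2].
function mapRange(v, a1, b1, a2, b2) {
  return a2 + (b2 - a2) * ((v - a1) / (b1 - a1));
}

// Computes size and offset for each of the 20 body segments at time t.
function fishSegments(t) {
  const segments = [];
  for (let i = -10; i < 10; i++) {
    const x = mapRange(i, -10, 10, 0, Math.PI);     // half a period along the body
    const y = mapRange(i, -10, 10, 0, 2 * Math.PI); // a full period along the body
    segments.push({
      // Size follows sin(x): zero at the first segment, largest mid-body.
      w: 10 * Math.sin(x),
      h: 20 * Math.sin(x),
      // Side-to-side sway: shorter wavelength, faster in time.
      dx: 2 * Math.cos(y + t * 3),
      // Up-and-down bob: longer wavelength, slower in time.
      dy: 5 * Math.cos(x + t / 5),
      // Segments are spaced 3 units apart along Z.
      dz: i * 3,
    });
  }
  return segments;
}

const segs = fishSegments(0);
console.log(segs.length); // 20
```

Calling `fishSegments` with an increasing `t` each frame shifts the two cosine waves along the body, which is what reads as swimming.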

To create the effect of depth in the water (fish further from the camera look bluer and more faded, while closer fish look whiter and clearer), I drew planes at 5 percent opacity each and spread them out along the Z-axis. A fish further back has more of these translucent layers between it and the camera, so it picks up more of their colour. I found that this mimics the effect I was looking for well.
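The layering effect can be quantified with standard alpha ("over") compositing: each 5% plane keeps 95% of whatever is behind it, so after n planes the water colour makes up 1 − 0.95ⁿ of the pixel. The plain-JavaScript sketch below assumes the camera sits on the +Z side looking toward −Z (so planes with a larger z than the fish are in front of it); the plane positions, water colour, and function names are all hypothetical, and lighting is ignored.

```javascript
const PLANE_ALPHA = 0.05; // opacity of each plane, as in the sketch

// Count the planes between a fish and the camera (assumes camera on the +Z side).
function countPlanesInFront(planeZs, fishZ) {
  return planeZs.filter((z) => z > fishZ).length;
}

// After n layers, the water colour contributes 1 - (1 - alpha)^n of the pixel.
function fogFraction(n) {
  return 1 - Math.pow(1 - PLANE_ALPHA, n);
}

// Blend the fish colour toward the water colour, channel by channel.
function tintedColour(fishRGB, waterRGB, n) {
  const f = fogFraction(n);
  return fishRGB.map((c, k) => Math.round(c * (1 - f) + waterRGB[k] * f));
}

// 20 hypothetical planes spread from z = -500 to z = 450.
const planeZs = Array.from({ length: 20 }, (_, k) => -500 + k * 50);
const water = [20, 60, 120]; // hypothetical water colour
const fishColour = [250, 250, 250];

console.log(tintedColour(fishColour, water, countPlanesInFront(planeZs, 480)));  // nearest: unchanged
console.log(tintedColour(fishColour, water, countPlanesInFront(planeZs, -520))); // farthest: about 64% water
```

Because the fade compounds per layer rather than adding linearly, the first few planes in front of a fish have the most visible effect, and very distant fish approach the water colour without ever fully disappearing.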

Some code I want to highlight:

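```javascript
// Excerpt from the fish's render() method: draws the body as 20 boxes.
for (let i = -10; i < 10; i++) {
  // Map the segment index onto half (x) and a full (y) period along the body.
  let x = map(i, -10, 10, 0, PI);
  let y = map(i, -10, 10, 0, TWO_PI);

  // Advance the animation time (this runs once per segment, so 20 times per frame).
  this.z += 0.01;

  // Difference between the starting direction and the current velocity,
  // normalized so each component lies between -1 and 1.
  this.changeInDir = p5.Vector.sub(this.initDir, this.vel);
  this.changeInDir.normalize();

  push();
  // Scale to at most 45 per component, used below as angles in degrees.
  this.changeInDir.mult(45);

  // Rotate the body toward the swimming direction.
  angleMode(DEGREES);
  rotateX(this.changeInDir.y);
  rotateY(-this.changeInDir.x * 2);
  rotateZ(this.changeInDir.z);
  angleMode(RADIANS);

  // Side-to-side sway (fast), up-and-down bob (slow), segments spaced along Z.
  translate(2 * cos(y + this.z * 3), 5 * cos(x + this.z / 5), i * 3);

  strokeWeight(0.2);
  fill(130);
  specularMaterial(250);

  // Box size follows sin(x): thin at the ends, widest mid-body.
  box(10 * sin(x), 20 * sin(x), 3);
  pop();
}
```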

This code block is from the render() function of the fish (boids). It uses the change in direction to rotate each fish's body to face the direction it is swimming in. Working this out was challenging for me, especially in three dimensions; through trial and error I found that this setup works best, though it is not perfect.
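One possible alternative, not the approach used in the sketch, is to compute the orientation directly from the velocity as yaw and pitch angles using `atan2`. The plain-JavaScript sketch below assumes the body is laid out along Z with the head pointing toward +Z (the segments are drawn at `i*3` along Z, but which end is the head is an assumption). It defines its own rotation helpers with the standard right-handed convention and checks that rotating the +Z axis lands on the velocity direction; whether p5's `rotateX`/`rotateY` use the same signs should be verified in the sketch. All function names here are hypothetical.

```javascript
// Yaw (about Y) and pitch (about X) that turn the +Z axis toward velocity v.
function headingAngles(v) {
  const horizontal = Math.hypot(v.x, v.z);
  return {
    yaw: Math.atan2(v.x, v.z),
    pitch: Math.atan2(-v.y, horizontal),
  };
}

// Standard right-handed rotation of point p about the X axis by angle a (radians).
function rotateXVec(p, a) {
  return {
    x: p.x,
    y: p.y * Math.cos(a) - p.z * Math.sin(a),
    z: p.y * Math.sin(a) + p.z * Math.cos(a),
  };
}

// Standard right-handed rotation of point p about the Y axis by angle a (radians).
function rotateYVec(p, a) {
  return {
    x: p.x * Math.cos(a) + p.z * Math.sin(a),
    y: p.y,
    z: -p.x * Math.sin(a) + p.z * Math.cos(a),
  };
}

// Apply pitch first, then yaw; in p5 call order this is rotateY(yaw) then rotateX(pitch).
function orientForward(v) {
  const { yaw, pitch } = headingAngles(v);
  return rotateYVec(rotateXVec({ x: 0, y: 0, z: 1 }, pitch), yaw);
}

const vel = { x: 3, y: -4, z: 12 }; // |vel| = 13
console.log(orientForward(vel)); // approximately { x: 3/13, y: -4/13, z: 12/13 }
```

In the sketch, the angles would be applied in `RADIANS` mode as `rotateY(yaw); rotateX(pitch);` before the per-segment `translate`. This ignores roll, and yaw is undefined when a fish swims straight up or down (`Math.atan2(0, 0)` returns 0), so smoothing the velocity may still be needed to avoid abrupt turns.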

Future work:

At some point I'd love to fix the fish occasionally rotating abruptly. I'd also like to add more complex geometry to the fish bodies, introduce some variation between individual fish, and increase the number of fish.