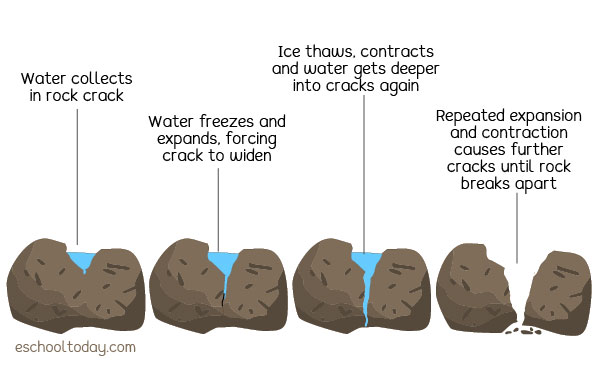

Concept

I want to make a tornado. A tornado is a violently rotating air column extending from a thunderstorm to the ground. A dark, greenish sky often portends it. Black storm clouds gather. Baseball-size hail may fall. A funnel suddenly appears, as though descending from a cloud. The funnel hits the ground and roars forward with a sound like that of a freight train approaching. The tornado tears up everything in its path [National Geographic].

Show Plan

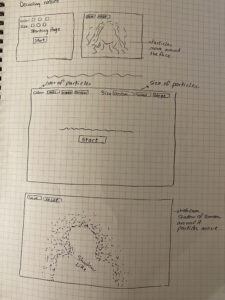

Assuming we will be using traditional tables, I want the final presentation to look like a science fair booth. The carton will hold all the information about tornadoes: mitigation and the things everyone needs to know about them. I’ll fit the information inside the carton (paper cut-outs, etc.) and place my p5.js sketch on it so that it is ‘scannable’.

My main attraction will be AR. Yes, having users scan a QR code would draw them in (mwahaha).

Technicality

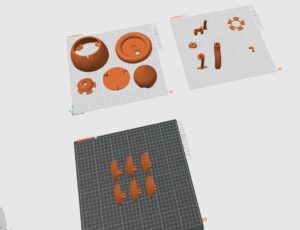

I am using a p5.js plugin called simpleAR, made by Tetunori, which allows the digital sketch to be projected onto a ‘marker’. Given this medium, I wanted to push, nudge, and slap myself a bit harder this time by working in a three-dimensional workspace using WebGL.

The tornado is made of a particle system with a custom force that simulates a vortex. My plan for the interaction is simple: as the user swipes across the tornado, it spins faster and grows into a bigger, faster, scarier tornado.
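The post does not show the vortex force itself, so here is a minimal sketch of one common way to build one, assuming each particle has `pos` and `vel` objects of `{ x, y, z }` numbers. The names `vortexForce` and `stepParticle` and all default strengths are hypothetical, not the project’s code. The force combines a tangential push around the vertical axis (the swirl), a small inward pull toward the axis, and a constant lift. It is written in plain JavaScript so it runs without p5.js.

```javascript
// Hypothetical vortex force (not the project's actual code).
// Particles swirl around the vertical y-axis through the origin.
// In p5.js WEBGL mode the y-axis points down, so a negative y force lifts particles.
function vortexForce(pos, { swirl = 0.05, pull = 0.01, lift = 0.02 } = {}) {
  const r = Math.hypot(pos.x, pos.z); // horizontal distance from the axis
  if (r === 0) return { x: 0, y: -lift, z: 0 }; // on the axis: no swirl direction

  // Tangential unit vector: the outward radial direction rotated 90° in the x-z plane.
  const tanX = -pos.z / r;
  const tanZ = pos.x / r;
  // Inward unit vector, pointing from the particle back toward the axis.
  const inX = -pos.x / r;
  const inZ = -pos.z / r;

  return {
    x: tanX * swirl + inX * pull,
    y: -lift,
    z: tanZ * swirl + inZ * pull,
  };
}

// Semi-implicit Euler step: add the force to the velocity, apply damping,
// then move the particle by the new velocity.
function stepParticle(p, force, damping = 0.98) {
  p.vel.x = (p.vel.x + force.x) * damping;
  p.vel.y = (p.vel.y + force.y) * damping;
  p.vel.z = (p.vel.z + force.z) * damping;
  p.pos.x += p.vel.x;
  p.pos.y += p.vel.y;
  p.pos.z += p.vel.z;
}

// Usage: one particle 10 units from the axis, starting at rest.
const particle = { pos: { x: 10, y: 0, z: 0 }, vel: { x: 0, y: 0, z: 0 } };
stepParticle(particle, vortexForce(particle.pos));
```

Scaling `swirl` by an interaction-driven value (such as the `speedMultiplier` introduced later) would be one way to make a swipe spin the tornado faster; the damping keeps velocities from growing without bound.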

Prototypes

Philosophy, Why, and Passion

Humans have always feared nature, powerless against its judgment of our kind. But since the Industrial Revolution, we have become a force more destructive than nature itself: we are the Anthropocene.

Milton, Sharjah, Sumedang, and other places around the globe. In an effort to spread awareness of climate change, I created this final project. I am neither a policymaker nor an environmental expert. But if there is anything I can do to help our home, this is the least I can do.

Challenges I Found (so far!)

📸 As you may have noticed from the prototype demo, I am displaying my canvas without any background. While experimenting, I found that recent releases of the p5.js editor and transparency in WebGL are like oil and water: they don’t mix. I expected the clear() method to leave the canvas with no background; instead, it produced a solid black one.

I spent hours meticulously trying to work out why transparency did not work until I stumbled upon a forum question. Because the editor no longer supports transparent backgrounds natively, I decided to move my work to OpenProcessing, which handles WebGL differently and, most importantly, where the clear() method works as intended.

🤌 Design and Interactivity. I am torn on what to decide here: should I make a ‘stylized’ tornado that does not follow natural laws, or should I create a scaled simulation? And how much does my interactivity actually count as ‘interactive’?

There are certain limitations in the AR library I am using. In particular, there is no handshake between the screen and the canvas. My original plan was to use gestures to interact with the tornado, but that is very hard to do because screen touches are not passed through to the canvas. So I either have to resort to buttons (which is meh) or do something else entirely.

DRAFT PROGRESS

After some thought, I finally decided to use the particle-system tornado as the base. So far, I have added two new features:

a) Skyscrapers: Rectangular blocks of various heights are now scattered across a plane. Each skyscraper has a ‘hitbox’ that reacts to the tornado.

b) Tornado-skyscraper collision check: When the tornado collides with a skyscraper, it ‘consumes’ it and the particles speed up. To implement this feature, I introduced a speedMultiplier variable, which each particle’s update() method takes as a parameter:

```javascript
update(speedMultiplier) {
  // Increment the angular position to simulate swirling
  this.angle += (0.02 + noise(this.noiseOffset) * 0.02) * speedMultiplier;

  // Update the radius slightly with Perlin noise for organic motion
  this.radius += map(noise(this.noiseOffset), 0, 1, -1, 1) * speedMultiplier;

  // Update height with sinusoidal oscillation (independent of speedMultiplier)
  this.pos.y += sin(frameCount * 0.01) * 0.5;

  // Wrap height to loop the tornado
  if (this.pos.y > 300) {
    this.pos.y = 0;
    this.radius = map(this.pos.y, 0, 300, 100, 10); // Reset radius
  }

  // Update the x and z coordinates based on the angle and radius
  this.pos.x = this.radius * cos(this.angle);
  this.pos.z = this.radius * sin(this.angle);

  this.noiseOffset += 0.01 * speedMultiplier;
}
```
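The post does not show the collision check from b). A minimal sketch, assuming the tornado’s footprint is a circle on the ground plane and each skyscraper’s hitbox is an axis-aligned rectangle: clamp the tornado’s centre to the rectangle to find the closest point, then compare that distance with the radius. The object shapes, `hitsBuilding`, `consumeBuildings`, and the `boost` and `maxMultiplier` values are all hypothetical.

```javascript
// Hypothetical shapes (not the project's actual classes):
//   tornado:  { x, z, radius }          footprint of the funnel on the ground plane
//   building: { x, z, w, d, consumed }  centre, width (along x), depth (along z)

// Circle-vs-rectangle test on the x-z plane: clamp the circle centre to the
// rectangle, then compare the squared distance to that point with radius².
function hitsBuilding(tornado, b) {
  const closestX = Math.max(b.x - b.w / 2, Math.min(tornado.x, b.x + b.w / 2));
  const closestZ = Math.max(b.z - b.d / 2, Math.min(tornado.z, b.z + b.d / 2));
  const dx = tornado.x - closestX;
  const dz = tornado.z - closestZ;
  return dx * dx + dz * dz <= tornado.radius * tornado.radius;
}

// Marks each newly hit building as consumed and returns the updated
// multiplier, capped so the particles cannot speed up without bound.
function consumeBuildings(tornado, buildings, speedMultiplier, boost = 0.25, maxMultiplier = 3) {
  for (const b of buildings) {
    if (!b.consumed && hitsBuilding(tornado, b)) {
      b.consumed = true;
      speedMultiplier = Math.min(speedMultiplier + boost, maxMultiplier);
    }
  }
  return speedMultiplier;
}

// Usage: one building within reach, one far away.
const tornado = { x: 0, z: 0, radius: 50 };
const buildings = [
  { x: 40, z: 0, w: 20, d: 20, consumed: false },  // near edge at x = 30, inside the radius
  { x: 200, z: 0, w: 20, d: 20, consumed: false }, // out of reach
];
let speedMultiplier = 1;
speedMultiplier = consumeBuildings(tornado, buildings, speedMultiplier);
console.log(speedMultiplier); // 1.25
```

The cap is a design choice rather than a confirmed fix: since update() scales the noise-driven radius change by speedMultiplier, a ceiling keeps that per-frame change bounded.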

What is left:

▶️ Visuals: Improve the visual aspects of the sketch.

▶️ AR Port: Port the features to AR and move the tornado based on the device’s motion.

🛠️ Fix the tornado drifting upward whenever it reaches a certain speed. This is mostly due to the speed multiplier and the particles reacting together.
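For the AR port, one possible way to move the tornado with the device’s motion is to treat tilt like a joystick. This sketch assumes tilt angles in degrees in the style of the browser’s DeviceOrientationEvent (`beta` for front/back tilt, `gamma` for left/right tilt); p5.js exposes comparable values through its `rotationX` and `rotationY` variables. The function name, the dead zone, and the speed limits are hypothetical, and none of this has been tried with simpleAR.

```javascript
// Hypothetical helper: converts device tilt (degrees) into a per-frame
// velocity for the tornado on the ground plane.
//   gamma (left/right tilt)  -> movement along x
//   beta  (front/back tilt)  -> movement along z
function tiltToVelocity(beta, gamma, { deadZone = 5, maxTilt = 30, maxSpeed = 4 } = {}) {
  const axis = (angle) => {
    if (Math.abs(angle) < deadZone) return 0; // ignore small hand tremors
    const clamped = Math.max(-maxTilt, Math.min(maxTilt, angle));
    return (clamped / maxTilt) * maxSpeed; // linear ramp up to maxSpeed
  };
  return { vx: axis(gamma), vz: axis(beta) };
}

// Usage: phone tilted 15° back and held level left/right.
const v = tiltToVelocity(-15, 0);
console.log(v.vx, v.vz); // 0 -2
```

The angles are measured relative to the device lying flat, so if users hold the phone upright while scanning the marker, subtracting a baseline reading taken at startup would likely feel more natural.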

Resources Used

Stylized Tornado – Aexoa_Uen

Tornado Simulation – Emmetdj

EasyCam – James Dunn

Sharjah Tornado

Sumedang, Indonesia Tornado